CDP vs Data Warehouse: What's the difference?

Learn the differences between a CDP and a data warehouse, and how you can use both in tandem to build an architecture that fits your data and business needs.

The Customer Data Platform (CDP) space has grown significantly in the last few years, with many brands beginning to implement CDPs as the foundational infrastructure of their growth stack.

When initially learning about CDPs, however, some may find themselves asking, “What’s the big deal, doesn’t our data warehouse already do that?!”

The confusion lies in the fact that both systems ingest and store data from multiple sources, and allow stakeholders across various teams to access that data. A closer look, however, reveals that data warehouses and CDPs are fundamentally different tools and that they are not mutually exclusive. In fact, they can be used in tandem to accelerate time to value.

What is a data warehouse?

As defined by Amazon Web Services (AWS), a data warehouse is a central repository of information that can be analyzed to make more informed decisions. Data warehouses collect processed data from transactional systems, relational databases, and other sources in batches and organize it into databases.

Product, Analytics, and Data teams use applications such as business intelligence (BI) tools and SQL clients to access and analyze data within the data warehouse. The value of data warehouses is in their ability to collect, organize, and store large amounts of data in a way that is easily accessible to these applications’ reports, dashboards, and analytics queries.

Benefits of using a data warehouse include:

- Better access to data for informed decision making

- Consolidated data from many sources

- Historical data analysis

- Separation of analytics processing from other “upstream” systems, such as transactional systems, which improves the performance of all systems

Examples of leading data warehouses are Amazon Redshift, Snowflake, and Google BigQuery.

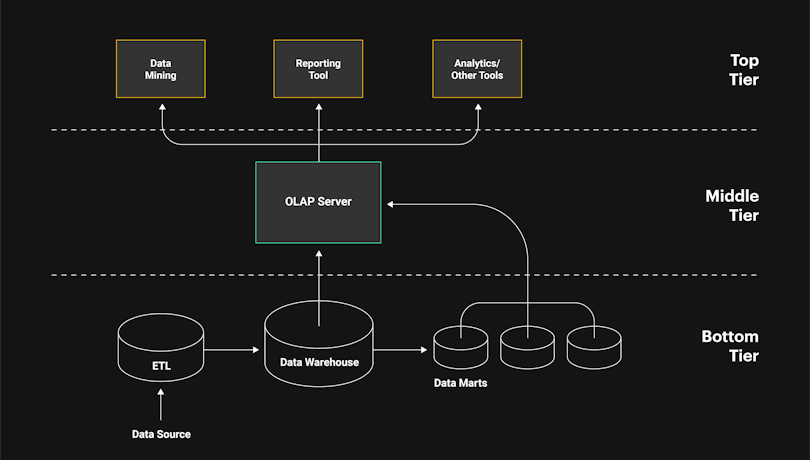

What does a typical data warehouse architecture look like?

Data warehouse architecture is often broken into three tiers. The top, most accessible tier is the front-end client that presents results from BI tools and SQL clients to users across the business. The middle tier is the Online Analytical Processing (OLAP) server that is used to access and analyze data. The bottom tier is the database server where data is loaded and stored. Data in the bottom tier is kept in either hot storage (such as SSDs) or cold storage (such as Amazon S3), depending on how frequently it needs to be accessed.
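The three tiers can be illustrated with a minimal Python sketch. It is a toy model under stated assumptions, not how a production warehouse is built: an in-memory SQLite database stands in for the database server, and the `orders` table, its sample rows, and the function names `revenue_by_region` and `render_report` are hypothetical.

```python
import sqlite3

# Bottom tier: the database server. An in-memory SQLite database stands in
# for a real warehouse here (an assumption for illustration only).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, region TEXT, amount REAL)")

# Data arrives in batches from upstream systems (hypothetical sample rows).
batch = [
    (1, "EMEA", 120.0),
    (2, "EMEA", 80.0),
    (3, "APAC", 50.0),
]
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", batch)
conn.commit()


def revenue_by_region(connection):
    """Middle tier: an analytical query that aggregates the stored rows."""
    cursor = connection.execute(
        "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"
    )
    return cursor.fetchall()


def render_report(rows):
    """Top tier: format query results for a business user."""
    return "\n".join(f"{region}: {total:.2f}" for region, total in rows)


report = render_report(revenue_by_region(conn))
print(report)
```

Keeping the aggregation and the presentation in separate functions mirrors the separation between the analytical layer and the front-end client described above.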

What is a Customer Data Platform?

A Customer Data Platform (CDP) is infrastructure that collects a company’s customer data from across sources in real time, validates it against an established data plan, creates a continuous 360-degree customer view, and streams data to external tools and systems via packaged integrations to power marketing, advertising, customer support, and analytics use cases.

CDPs support Developers, Product Managers, and Marketers by making it much easier to understand customer engagement and power real-time customer experiences. With a CDP in place, Developers can spend less time working on vendor implementations and managing third-party code, and Product Managers and Marketers can access the real-time data they need, where they need it.

The benefits of a Customer Data Platform include:

- Increased access to real-time customer data for non-technical stakeholders

- Improved customer data quality throughout the martech stack

- Simplified data governance processes and increased data security

- Faster data activation for better data-driven personalization across channels

- Fewer engineering hours spent working on vendor implementations and managing third-party code

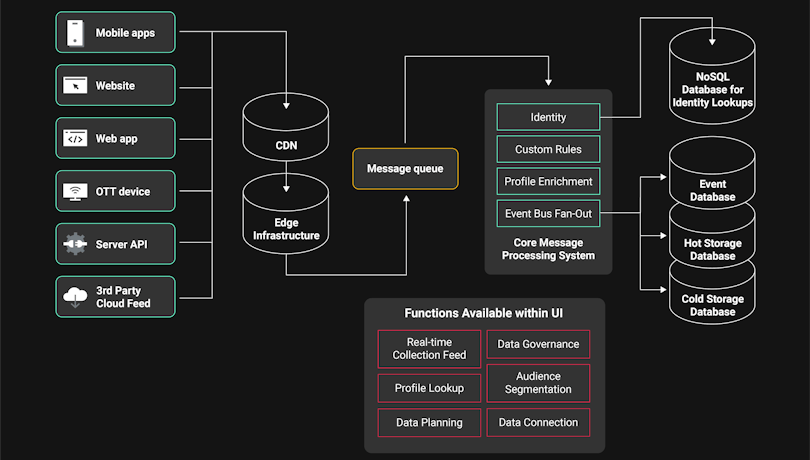

What does a typical CDP architecture look like?

CDPs collect first-party, individual-level customer data from across your business's digital touchpoints and servers (mobile app, website, OTT, S2S data feeds, and more) via API connections and/or SDK implementations. This data is then processed and standardized (transformation, enrichment, validation) to make it easy to integrate with external tools and systems. As data is collected, a real-time view of incoming data is available within the UI so that users across your organization can monitor activity. Customer data is then stored for the long term in different data repositories depending on the type of data and the intended purpose. Functions such as profile lookups, data quality management, audience segmentation, and data connection are available within the CDP’s UI, enabling users to activate customer data.
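The flow described above (collect, validate and standardize, store against a profile, forward to connected tools) can be sketched in a few lines of Python. This is a simplified illustration, not mParticle's implementation or API: the `MiniCDP` class, the `DATA_PLAN` dictionary, and the event shape are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field

# Hypothetical data plan: allowed event names and their required attributes.
DATA_PLAN = {
    "purchase": {"sku", "price"},
    "page_view": {"url"},
}


@dataclass
class Profile:
    """A very small stand-in for a unified customer profile."""
    user_id: str
    events: list = field(default_factory=list)


class MiniCDP:
    """Toy pipeline: validate, standardize, store, and forward events."""

    def __init__(self, data_plan):
        self.data_plan = data_plan
        self.profiles = {}      # user_id -> Profile
        self.destinations = []  # callables that receive clean events
        self.rejected = []      # events that failed validation

    def connect(self, destination):
        """Register a downstream tool as a callable."""
        self.destinations.append(destination)

    def collect(self, event):
        """Ingest one event; return True if it passed validation."""
        name = event.get("name")
        attrs = event.get("attributes", {})
        required = self.data_plan.get(name)
        # Validation: the event must have a user, be named in the plan,
        # and carry every required attribute.
        if "user_id" not in event or required is None or not required.issubset(attrs):
            self.rejected.append(event)
            return False
        # Standardization: normalize the user ID to a string.
        clean = {"user_id": str(event["user_id"]), "name": name, "attributes": dict(attrs)}
        # Storage: append the event to the user's profile.
        profile = self.profiles.setdefault(clean["user_id"], Profile(clean["user_id"]))
        profile.events.append(clean)
        # Activation: forward the clean event to every connected destination.
        for send in self.destinations:
            send(clean)
        return True


cdp = MiniCDP(DATA_PLAN)
forwarded = []                  # stands in for an external tool
cdp.connect(forwarded.append)

cdp.collect({"user_id": 42, "name": "purchase", "attributes": {"sku": "A1", "price": 9.99}})
cdp.collect({"user_id": 42, "name": "purchase", "attributes": {"sku": "A1"}})  # missing "price"
```

In this sketch the second event is rejected because it lacks a required attribute, so only the first event reaches the profile store and the connected destination.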

CDP vs Data Warehouse: So what's the difference?

Although some have argued that the functions of the CDP can be built within the data warehouse—an approach referred to as the "Composable CDP"—the reality is that the CDP and the data warehouse are two fundamentally different systems that are at their best when used in sync.

Data warehouses provide a system for long-term data storage and analysis. They are able to house vast quantities of data at a reasonable cost, and support a diverse range of data types including customer records, SKU information, employee records, and more. Due to their long-term storage and flexibility, data warehouses often serve as the system of record for enterprise organizations, enabling data teams to develop a storage system that makes sense for their unique data requirements.

While the data warehouse functions as the system of storage, the CDP functions as the system of movement in a company's data stack. CDPs make it possible for non-technical data consumers to access a continuous stream of high-quality customer data, whether ingested from customer touchpoints, such as mobile apps and websites, or from the data warehouse, and activate it to power real-time personalization. CDPs provide value by generating insights and enriching data with additional customer context in transit so that business teams can activate data effectively without high levels of technical support.

By integrating the data warehouse with the CDP, as is possible with mParticle Warehouse Sync, teams can configure an architecture in which the data team develops a custom system of record and business teams access the real-time customer data they need to deliver modern customer experiences.
