Generate in-warehouse predictive audiences

Learn how Jayant Subramanian, data science intern, developed a proof-of-concept machine learning pipeline for predicting user behaviors from data pre-processing to model training and beyond using Snowflake and Apache Airflow.

Jayant Subramanian worked for mParticle as a data science intern during the summer of 2018 and a part-time intern in 2020, focusing mainly on preliminary R&D of mParticle's machine learning infrastructure. He recently graduated from Columbia University with a major in Mathematics-Statistics and a minor in Computer Science. He is currently a data scientist at Facebook NYC, where he works in AI/ML analytics.

During my time as a data science intern at mParticle, I worked on preliminary R&D of machine learning tools that can leverage mParticle’s access to data positively for clients. The goal of my project was two folds. First, research on effective ML algorithms that work well on mParticle's data, and second, develop a proof-of-concept ML pipeline that could either run in mParticle or mParticle's clients' own environment.

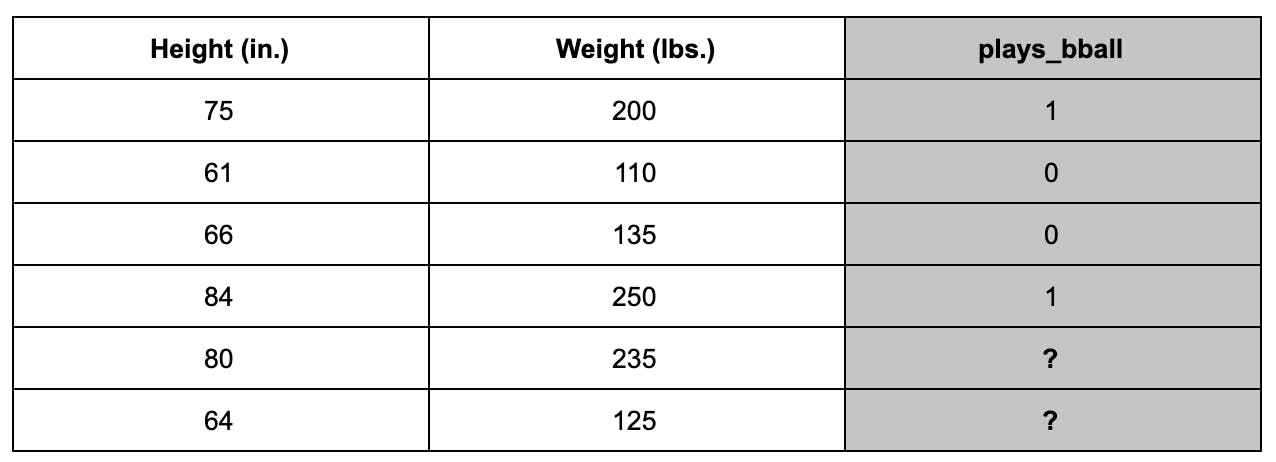

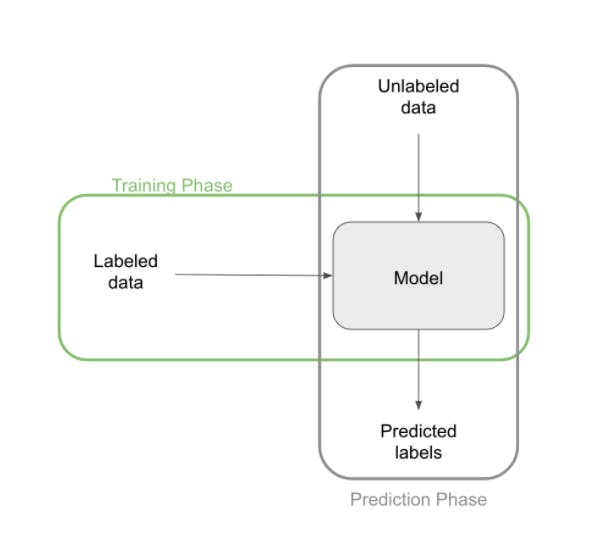

Machine Learning is a broad field that encompasses many mathematical and computational methods to derive insights and patterns from data. The specific branch of ML that my work dealt with is known as supervised learning. In supervised learning, we are given a set of labeled examples, each with its own associated features. Our goal is to use these examples to train a model that will be able to accurately predict the label for a new set of examples with unknown labels. For example, imagine we were trying to predict whether someone played basketball based on their height and weight. We are given a few labeled examples:

Here we call height and weight features and plays_bball the label. We would use the first 4 rows of labeled data to train a model, and then use the trained model to predict the label for the last 2 unlabeled rows.

Considering the amount of data mParticle processes every day, we could make use of supervised learning techniques to deliver additional insight, predictions, and analytics to our clients. Most of these prediction tasks take the form of predicting the behavior of users:

- How likely is a user to churn?

- How likely is a user to make a purchase?

- How likely is a user to perform some goal event within some time frame?

However, in order to make informed predictions about users, we need to compile features on a user-level, rather than an event-level, which is what most of our data is currently collected as.

Research

My first summer at mParticle was devoted entirely to research, exploring what types of helpful and informative user-level features we can construct from mParticle’s data schema, and which of these features are good predictors of important events like churns and purchases. With regard to mParticle’s schema, there are two main types of features:

- Quantitative Features - features that have useful numeric representations by default (event_length, event_ltv_value, session_count, revenue_per_transaction, etc.). These features or basic functions of these features can be directly used in most prediction tasks since they can naturally be represented in Euclidean space.

- Categorical Features - features that don’t have useful numeric representations in their natural form (message_type, event_name, brand, country_code, etc.). These types of features need to be transformed into quantitative features in order to use them to do machine learning. Even features like message_type which are naturally numeric can be considered categorical if their numeric representations are not indicative of the feature value itself. For instance, a message_type of 2 does not have half the inherent value of a message_type of 4. In fact, 2 and 4 indicate entirely different events!

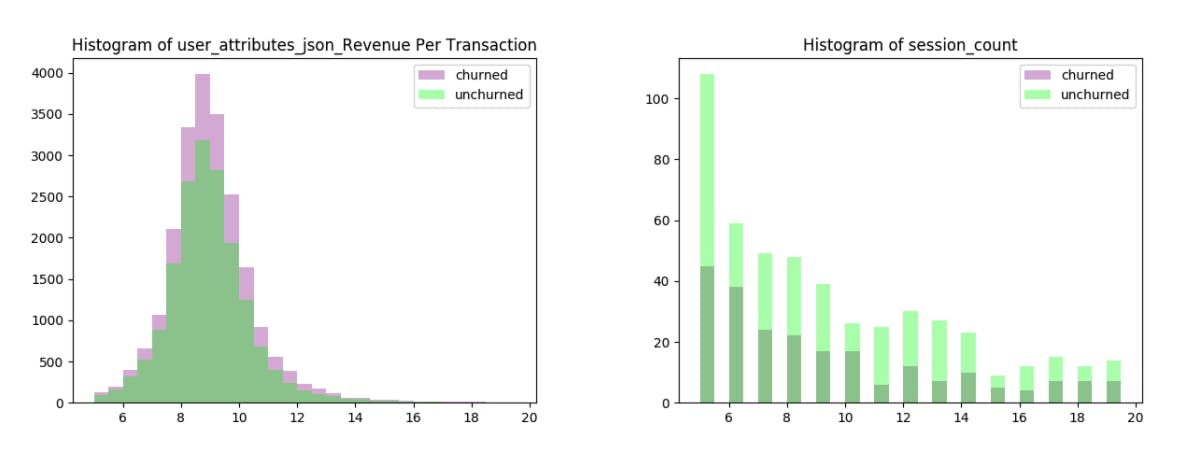

When dealing with quantitative features, we often want to transform them into distributions that are easy to analyze, like a Gaussian or Poisson. Sometimes, the features are naturally distributed in an informative way:

As you can see in the above figures, the revenue_per_transaction and session_count features are distributed nicely (Gaussian and Poisson, respectively). However, it is unclear as to whether they will be helpful to predict churn, as the churned user behavior seems distributed very similarly to the unchurned user behavior.

Other times, we have to manually apply transformations to the data to force it into a useful distribution. These transformations can often be found intuitively with some knowledge of statistics.

When dealing with categorical features on the other hand, there are countless ways to represent features. We can represent them as numeric dictionaries (kind of like the way message_type is already distributed), but then we run into the problem described above, where the representation value does not accurately reflect the feature category. We could also create dummy variables, encoding each category onto its own separate column:

This solves the representation value problem, but creates an entirely new problem, namely that we create a LOT of new features, and the features we create are very sparse, meaning that not very much information is encoded in them. Furthermore, research has shown that sparse features are often (but not always) poor predictors in machine learning problems.

Through my initial summer, I developed a set of classifiers that were able to accurately predict churn and purchase events (between 85-95% accuracy depending on the problem). The best-performing models were tree-based ensemble models like shallow random forest and gradient boosted decision trees, although simpler models like vanilla decision trees, 50-nearest-neighbors, and logistic regressions (with thresholding) performed quite well on most prediction tasks. For a brief intro to what some of these models actually do, you can visit this link.

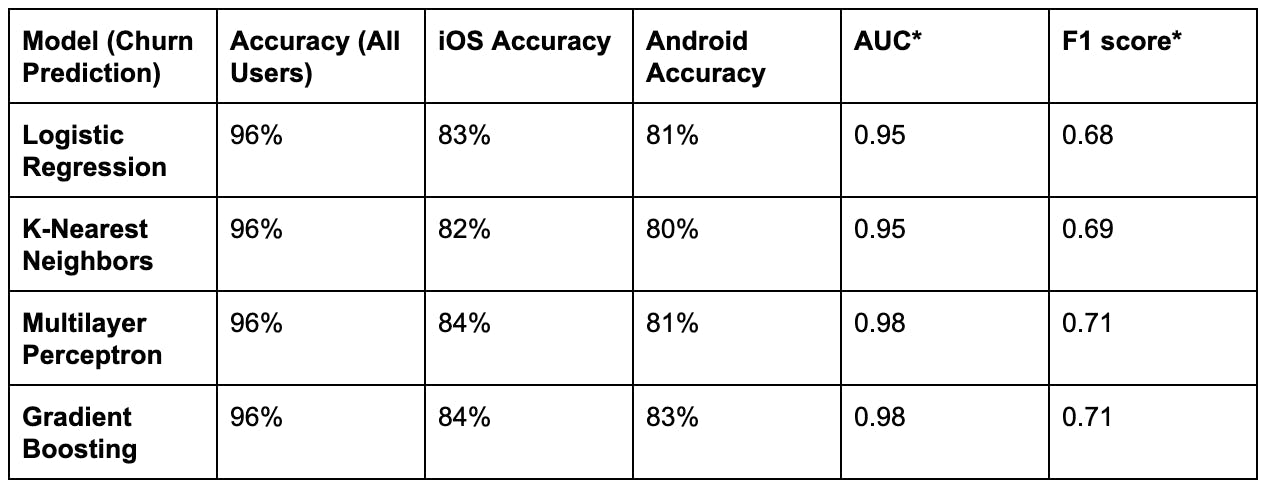

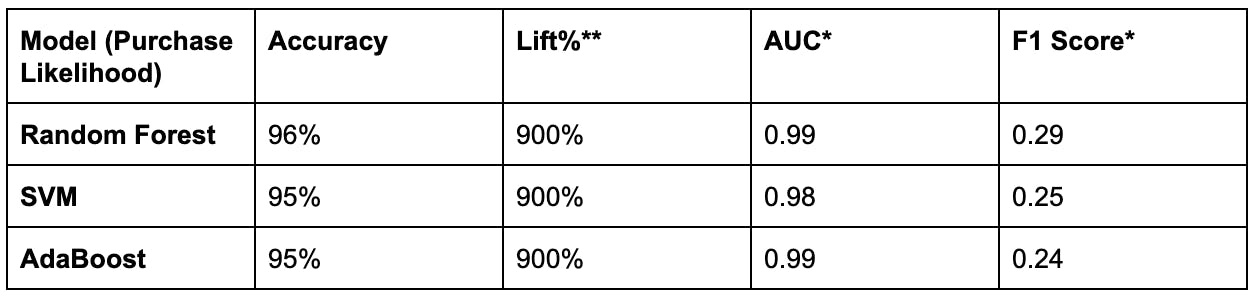

I worked with one of mParticle’s clients to test the suite of models on real production-caliber user data, and through testing, I found that dummy variables with some thresholding modifications were the most viable solution to dealing with mParticle’s categorical features (dummying variables tended to increase model accuracy by 5-10 percent across the board), and I also found out that mParticle deals with WAY too much data for any ML infrastructure to be efficient locally. Some high-level results for some of the well-performing models for each task are shown below:

*AUC and F1 score are measures of how precise a model is (how infrequent false positives and false negatives are). A score close to 1 indicates a low false positive and false negative rate (a good model). These are necessary metrics to tune for in these types of cases since about 80 percent of users for this client churn after less than 5 uses, so predicting that everyone churns would yield a reasonable accuracy, but very low F1 and AUC scores.

**Lift% in this instance is a measure of how much more likely to make a purchase a user is than the average user, given that the model predicted they would make a purchase.

So Machine Learning works! We see that ML predictions are fairly accurate and have very few false positives/negatives, even for imbalanced problems such as these. They also vastly outperform rule-based benchmarks in all major metrics. Now we just need to deliver these predictions to clients in an automated way.

Production

Now that we have established a set of features that seem to predict user behavior well on real data, how can we roll machine learning out as a product to clients in a scalable, efficient, and automated way? Our first step is designing a workflow that can answer these three main questions:

How do we deal with preprocessing enormous amounts of raw data into our desired features?

Some of mParticle’s clients store on the order of millions (or even billions) of rows of event data in their warehouses. Being able to wrangle and manipulate this amount of data efficiently through an external ML pipeline would require a massive amount of resources: countless servers to run the whole workflow on clusters, external storage for each client’s feature data, and a lossless pipeline to extract the data from warehouse, run distributed ML, and feed the results back into the warehouse. If only we could leverage a tool that was capable of storing and manipulating large amounts of data… How about the data warehouse itself!? We can just process the data and run the model within the warehouse, giving us the perfect set of tools to deal with the volume and format of the data. This tool is built to run in Snowflake warehouses specifically, but can easily be extended to other warehouses with minimal code modification.

What kinds of models can we efficiently implement and run on such a large amount of data?

Now that we have decided to run our machine learning tool in-warehouse, we have some limitations on types of models we can implement and run efficiently. Since we are restricted to SQL to run tabular operations on our data, things like matrix multiplication are out of the question. Ensemble models such as random forest and boosting methods would be too computationally expensive. Also, nonparametric models (models that predict directly from data rather than learning prediction parameters) would be difficult to run and exponentially more expensive to train because of the amount of new data that arrives every day. This leaves us with basic parametric models like Naive Bayes and Decision Trees. Naive Bayes is easy to implement in SQL using UDFs, it trains faster than decision trees on tabular data, and we can store the parameters in a small table for fast inference, whereas with Decision Trees we would need to store all the nodes of the tree, which is very expensive when dealing with high-dimensional data. Although Naive Bayes was not one of the main models explored through my research, the fact that all of the models we tested achieved high performance indicated that the prediction problem itself generalized quite well to many model classes, so we would expect Naive Bayes to achieve relatively high performance as well. Naive Bayes is also well known for being effective on practical data sets.

How do we incorporate new data into our existing features and model?

Now that we have decided to run our tool in-warehouse, this is a pretty simple question to answer. Our features are aggregated by users over multiple days, so we can trigger a job each day to process the previous day’s data in a staging table and use a MERGE query to merge the staging table into our final feature table. These jobs can be triggered using a simple scheduler. This tool is built on top of Apache Airflow, and uses airflow to manage tasks.

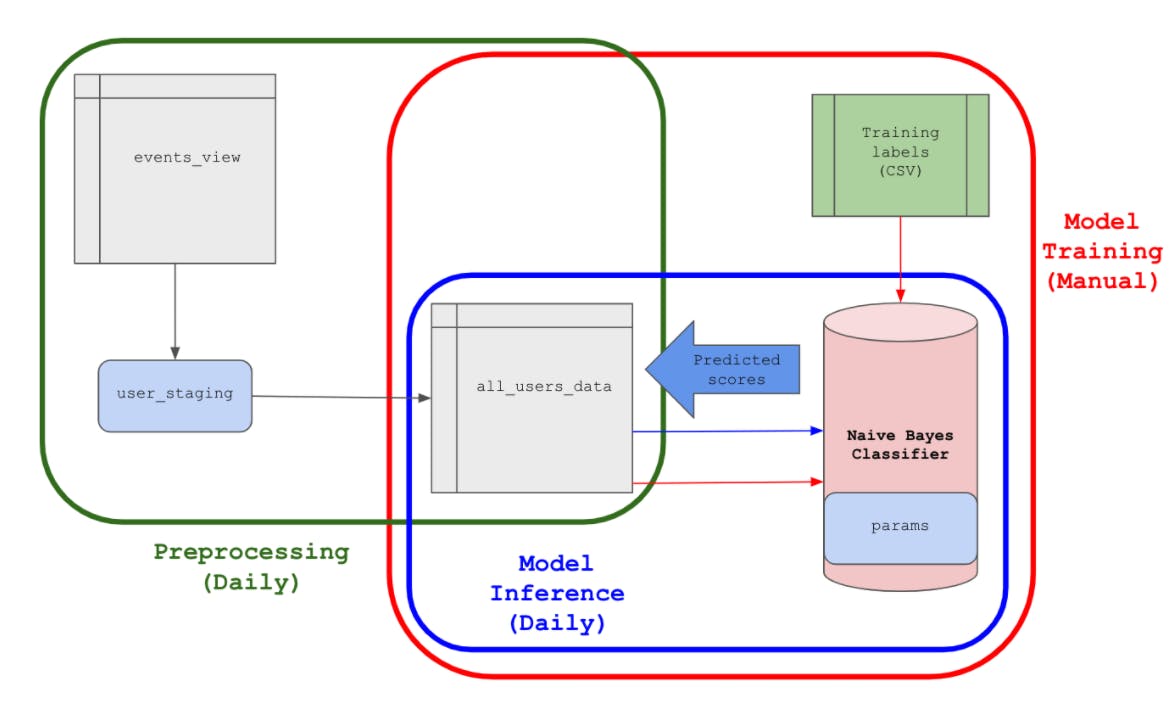

Based on the answers to our questions, I designed a workflow that looks like this:

here are 3 main aspects of this workflow, all of which are centered around our final feature table: all_users_data. Each of these aspects are handled entirely in the client’s data warehouse, from initial preprocessing to final model inference. These three aspects are individually detailed below:

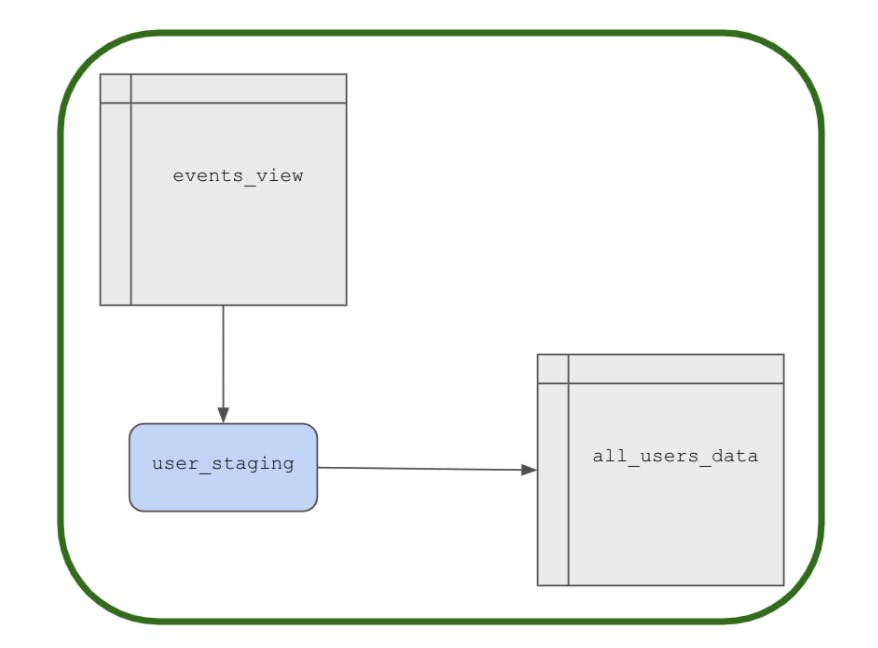

Preprocessing

The preprocessing pipeline deals with converting raw event data into model-usable user-level aggregated features, as well as updating the features regularly as new data is collected. This pipeline consists of 4 sequential tasks, which are run at the beginning of each day:

- Extracting previous day’s raw quantitative features (eventlength, eventltvvalue, etc.) from events_view and aggregating them into user-level quantitative features like totaltimespent, avg_session_length, etc. These user-level quantitative features for the previous day are stored in a staging table called user_staging.

- Extracting the previous day’s raw categorical features (custom ones can be specified by the client in the config files), encoding them into dummy variables, and aggregating them by user. We saw earlier that dummying categorical features like eventname, brand, and countrycode is an effective way to deal with non-numeric features in our case.

- Joining these user-level categorical dummy features into user_staging. Now user_staging contains all the fully processed feature data from the previous day.

- Merging user_staging into all_users_data, our final feature table.

In order to automatically trigger this string of tasks each day, we use Apache Airflow. Airflow provides an easy-to-use scheduling and task management infrastructure that we can use to backfill our table and handle failed tasks. It even provides a web-hosted UI to control our task DAG and manually trigger new runs.

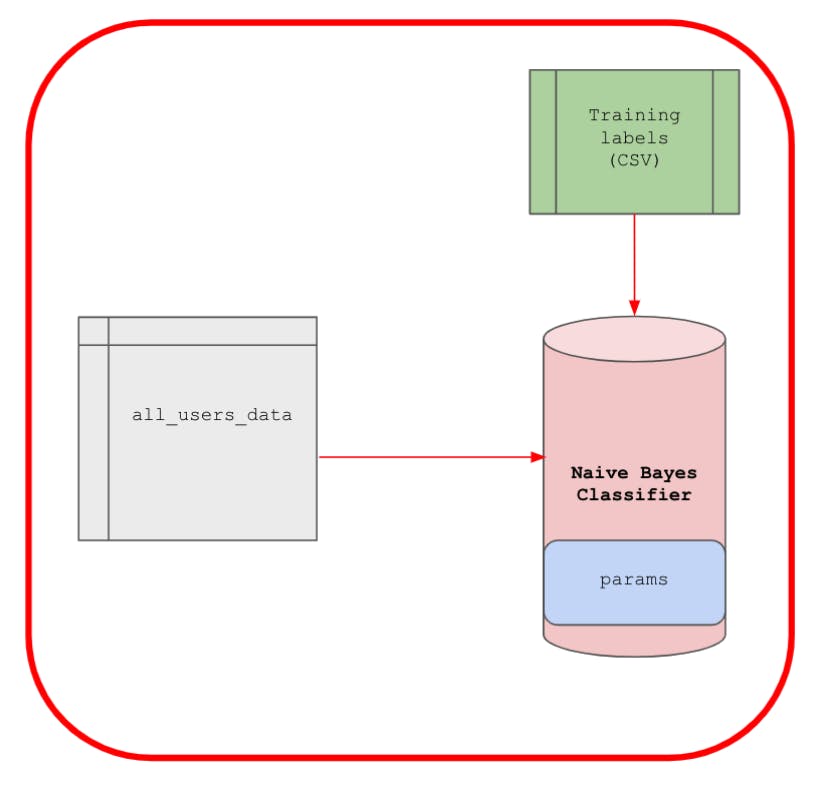

Model training

Now that we have a framework in place for wrangling and preprocessing the raw data and a self-updating feature table, we can train a classifier to solve a prediction problem. Since this is a supervised task, we need labels on our data. For increased flexibility on the types of problems this tool can support, the client can provide a CSV of mparticleuserid-label pairs to train the model on. Since our Naive Bayes model is a parametric model, the whole model is defined by a set of learned parameters (the mean and variance of the individual feature class conditionals), which are stored in the params table. If you want more information on how Naive Bayes works, this blog post details it pretty well.

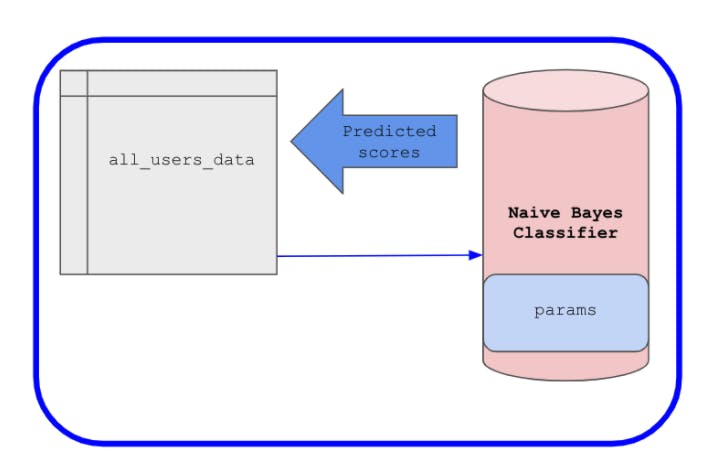

Model inference

Once the model has been trained on some labeled subset of users (provided in the CSV), we want to be able to use our learned parameters to predict the label of any given user. Since the latest parameters are already stored, we can predict the label for any user whose data is present in all_users_data at any time. However, the data in all_users_data changes every day, so our predictions for any given user could change day-to-day. That means we should repredict whenever the data changes, in other words, we want to re-predict the labels for all the users in all_users_data every day. This is achieved simply by adding model inference as a fifth step in the Apache Airflow processing pipeline: once the features in all_users_data are updated, we can use the current values stored in params in addition to the updated features to predict labels for each user and return them back to all_users_data. That means that predictions for any given user are not only easily accessible via query from all_users_data, but also that they are always based on the most recent data.

Conclusion

And there you have it: a production machine learning tool! There is obviously a lot of room for growth in this tool. It could be really helpful to add more models and provide automated model selection depending on the task, or to further facilitate labeling to the point where clients wouldn't even have to input their own training labels: they could simply select a goal event (or input their own query for custom tasks) and have the tool automate labeling and retraining. We could also add support for training different models on different audiences to provide even more personalized results. Machine Learning could truly take CDPs to the next level, and I'm excited to see how mParticle will use it next!