Navigating the CDP Noise: It’s time to move beyond dumb pipes

In part two of this two-part series, mParticle CEO and co-founder Michael Katz outlines four requirements brands should keep in mind when evaluating vendors to help them leverage their customer data.

In part one of this series, we looked at the false narratives that are being peddled by savvy product marketers.

Customer data realizes its value when it is seamlessly put to work for your customers and, ultimately, your business. To get there, teams must ensure that the data being moved is of high quality, has meaning and context, and can be easily used with any preferred ecosystem of tech partners.

With the advent of the modern data stack, there have been many new entrants into the ecosystem. Having been one of the companies defining the customer data space, we believe there is a new set of requirements that businesses should consider when selecting vendors to help them leverage their customer data.

We believe any business evaluating a platform to help activate customer data must focus on four key points of value:

- Protecting the business by ensuring compliance with privacy laws

- Simplifying the process of troubleshooting to eliminate data downtime

- Improving the understanding of the customer journey

- Accelerating the movement of customer data throughout the stack

Data Quality Matters!

Without high-quality data as a starting point, the rest doesn't matter. Intelligent pipes begin with a commitment to maintaining and protecting data integrity and consistency through data cataloging, schema validation, and rules for remediating bad, missing, or misconfigured data. The output is clean data that can power great applications and personalized experiences across all channels. This not only helps ensure sustainable value but also reduces the total cost of ownership.
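To make schema validation and remediation rules more concrete, here is a minimal Python sketch. It assumes a hypothetical data plan (`DATA_PLAN`) that maps each planned event name to expected attribute types; the event names, attributes, and the "coerce the type if possible, otherwise flag it" rule are illustrative assumptions, not any vendor's API.

```python
from dataclasses import dataclass, field

# Hypothetical data plan: planned event name -> {attribute: expected type}.
DATA_PLAN = {
    "purchase": {"order_id": str, "revenue": float, "currency": str},
    "add_to_cart": {"sku": str, "quantity": int},
}


@dataclass
class ValidationResult:
    event: dict
    errors: list = field(default_factory=list)

    @property
    def ok(self):
        return not self.errors


def validate(event):
    """Check one event against DATA_PLAN and apply a simple remediation rule."""
    result = ValidationResult(event=dict(event))  # copy so the input is not mutated
    schema = DATA_PLAN.get(event.get("name"))
    if schema is None:
        result.errors.append(f"unplanned event: {event.get('name')!r}")
        return result
    attrs = dict(event.get("attributes", {}))
    for key, expected in schema.items():
        value = attrs.get(key)
        if value is None:
            result.errors.append(f"missing attribute: {key}")
        elif not isinstance(value, expected):
            # Remediation rule (assumed): coerce misconfigured types, e.g. "19.99" -> 19.99.
            try:
                attrs[key] = expected(value)
            except (TypeError, ValueError):
                result.errors.append(f"bad type for {key}: {type(value).__name__}")
    result.event["attributes"] = attrs
    return result


result = validate({"name": "purchase",
                   "attributes": {"order_id": "A1", "revenue": "19.99", "currency": "USD"}})
print(result.ok, result.event["attributes"]["revenue"])  # True 19.99
```

Events that still carry errors after remediation could be quarantined rather than forwarded, so downstream tools only ever see data that matches the plan.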

For example, teams unifying data across various consumer touchpoints to dynamically calculate a rolling customer lifetime value need to make sure that the data uses consistent nomenclature across platforms and that identities are merged properly.
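The rolling lifetime value example depends on two steps: mapping each platform's event names onto one vocabulary, and merging identifiers that belong to the same person. The sketch below assumes a hypothetical alias map (`EVENT_ALIASES`) and uses a union-find structure as a stand-in for identity resolution; real identity strategies are usually richer than "link any two identifiers we are told match."

```python
from collections import defaultdict
from datetime import date, timedelta

# Hypothetical alias map: each platform's event name -> one canonical name.
EVENT_ALIASES = {
    "purchase": "purchase",
    "Purchase Completed": "purchase",
    "order_placed": "purchase",
}


class IdentityGraph:
    """Union-find over identifiers (emails, device IDs, ...) known to be one person."""

    def __init__(self):
        self.parent = {}

    def find(self, ident):
        self.parent.setdefault(ident, ident)
        while self.parent[ident] != ident:
            self.parent[ident] = self.parent[self.parent[ident]]  # path halving
            ident = self.parent[ident]
        return ident

    def link(self, a, b):
        self.parent[self.find(a)] = self.find(b)


def rolling_clv(events, graph, today, window_days=365):
    """Sum purchase revenue per merged customer over a trailing window."""
    cutoff = today - timedelta(days=window_days)
    totals = defaultdict(float)
    for ev in events:
        if EVENT_ALIASES.get(ev["name"]) != "purchase":
            continue  # non-purchase or unmapped names are skipped in this sketch
        if ev["date"] < cutoff:
            continue  # outside the rolling window
        totals[graph.find(ev["user"])] += ev["revenue"]
    return dict(totals)


graph = IdentityGraph()
graph.link("web:anna@example.com", "ios:device-42")
events = [
    {"user": "web:anna@example.com", "name": "Purchase Completed", "revenue": 30.0, "date": date(2023, 5, 1)},
    {"user": "ios:device-42", "name": "order_placed", "revenue": 20.0, "date": date(2023, 6, 1)},
    {"user": "ios:device-42", "name": "purchase", "revenue": 99.0, "date": date(2021, 1, 1)},
]
print(rolling_clv(events, graph, today=date(2023, 7, 1)))  # {'ios:device-42': 50.0}
```

Without the alias map, two of the three purchases would be missed; without the identity merge, Anna would appear as two customers worth 30.0 and 20.0.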

Data downtime is where value is destroyed

In terms of data movement, entropy is inevitable. Murphy’s law states that whatever can go wrong will go wrong, and many things can go wrong that create data breakage or data downtime. This is effectively a rate-of-change problem: there are so many internal and external changes that mistakes are made, resulting in latency or downtime. Visibility into where bottlenecks or breakage exist is critical for reducing data downtime and remediating issues that impact the business. Observability is not just about peace of mind; it’s about improving ROI and reducing total cost of ownership.

For example, if a marketing tech vendor’s API is experiencing latency, events are taking a while to load, or audience calculations are running slowly, teams should know exactly where in the pipeline the issue is. Troubleshooting blind may take days or weeks; troubleshooting with full transparency and visibility can reduce time to resolution by orders of magnitude.
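Knowing "exactly where in the pipeline" the issue is comes down to measuring each hop separately. This minimal sketch assumes every event records a timestamp (in milliseconds) as it leaves each stage; the stage names in `STAGES` are hypothetical, and the nearest-rank percentile is one simple choice among several.

```python
import math
from collections import defaultdict

# Hypothetical pipeline stages, in order; each trace maps stage -> timestamp in ms.
STAGES = ["collected", "validated", "enriched", "delivered"]


def percentile(values, pct):
    """Nearest-rank percentile, pct in 0-100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def stage_latencies(traces):
    """Collect per-hop durations, skipping hops a trace never reached."""
    hops = defaultdict(list)
    for trace in traces:
        for prev, nxt in zip(STAGES, STAGES[1:]):
            if prev in trace and nxt in trace:
                hops[f"{prev}->{nxt}"].append(trace[nxt] - trace[prev])
    return hops


def slowest_hop(traces, pct=95):
    """Return (hop, latency) for the hop with the highest pct-th percentile latency."""
    hops = stage_latencies(traces)
    return max(((hop, percentile(d, pct)) for hop, d in hops.items()), key=lambda x: x[1])


traces = [
    {"collected": 0, "validated": 5, "enriched": 12, "delivered": 900},
    {"collected": 0, "validated": 4, "enriched": 10, "delivered": 1200},
    {"collected": 0, "validated": 6, "enriched": 15},  # not yet delivered
]
print(slowest_hop(traces))  # ('enriched->delivered', 1190)
```

Here the delivery hop, where a downstream vendor API would sit, dominates, which points the investigation at the vendor rather than at ingestion or enrichment.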

The full continuum of customer insights

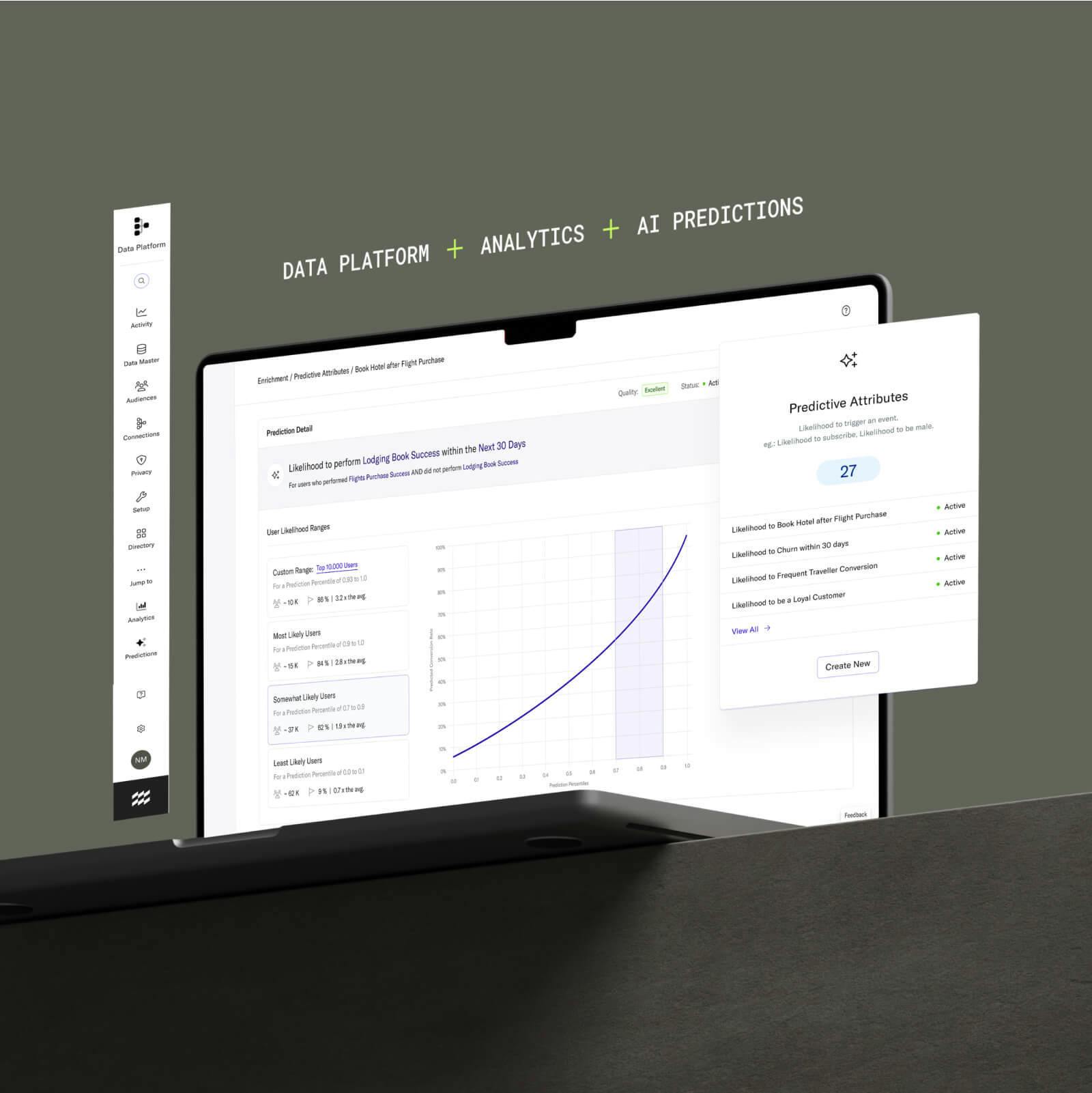

Intelligent pipes leverage both predictive and rules-based approaches to enrich customer data and inform the smart movement of data, allowing teams to move beyond simply batching or streaming large amounts of data to many different marketing tech applications. While many applications offer insights specific to their own tool, the value of centralizing insights in an intelligent pipe is that those insights can be connected to more channels than any single app offers. By enriching customer data in transit with more context, you not only get more value out of downstream applications but also unlock new opportunities that wouldn’t be possible otherwise.

For example, you might build a predictive audience of all users who are likely to make a purchase in the next 30 days, connect it to channels such as Facebook and Attentive, and then, for users who don’t respond within 7 days, also connect the audience to Iterable. That gives you complete control over how and when you engage a user with an offer on any channel.
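The routing logic in that example can be sketched in a few lines. This assumes a model has already produced a 30-day purchase-likelihood score per user; the 0.7 threshold, the field names, and the rule that a user who responds leaves the audience are all illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

PURCHASE_THRESHOLD = 0.7  # hypothetical cutoff on the model's score
PRIMARY_CHANNELS = ["Facebook", "Attentive"]
FALLBACK_CHANNELS = ["Iterable"]
FALLBACK_AFTER = timedelta(days=7)


@dataclass
class User:
    user_id: str
    purchase_score: float  # assumed: predicted likelihood of purchase in the next 30 days
    first_targeted: Optional[datetime] = None  # when the user first entered the audience
    responded: bool = False


def channels_for(user, now):
    """Decide which destinations should receive this user right now."""
    if user.purchase_score < PURCHASE_THRESHOLD or user.responded:
        return []  # not in the predictive audience, or already converted (assumed rule)
    if user.first_targeted is not None and now - user.first_targeted >= FALLBACK_AFTER:
        return PRIMARY_CHANNELS + FALLBACK_CHANNELS  # no response after 7 days: add Iterable
    return list(PRIMARY_CHANNELS)


now = datetime(2023, 6, 10)
print(channels_for(User("u1", 0.9, first_targeted=datetime(2023, 6, 1)), now))
# ['Facebook', 'Attentive', 'Iterable']
```

Keeping this decision in the pipe, rather than inside any one destination, is what lets the same audience be re-routed across tools as the user's state changes.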

Speed matters!

In 2023, audience segmentation is a table-stakes capability that all CDPs and reverse-ETL solutions offer for executing targeted campaigns and personalized experiences. We see two defining differences across the universe of vendors:

- Is the system real-time or batch based?

- Does the system offer logic to create the sequencing of audience construction and connection?

Speed matters: marketing in the moments that matter is the difference between success and failure. Besides bad data, the single biggest limiting factor is stale data. In batch scenarios, the targeted customer has often already bought the product or performed the action that was the goal of the campaign. But many streaming systems have limitations of their own, such as shortened look-back windows.
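One way to see the look-back trade-off is a streaming audience rule evaluated on every event. The minimal sketch below assumes a hypothetical rule ("at least `min_count` events within `lookback_s` seconds") and keeps each user's recent timestamps in a deque. Because that per-user state grows with the window, it illustrates why streaming systems often cap how far back they look. As a simplification, membership is only re-evaluated when the user's next event arrives.

```python
from collections import defaultdict, deque


class StreamingAudience:
    """Evaluate 'at least min_count events within lookback_s seconds' as events arrive."""

    def __init__(self, min_count, lookback_s):
        self.min_count = min_count
        self.lookback_s = lookback_s
        self.recent = defaultdict(deque)  # user_id -> timestamps inside the window
        self.members = set()

    def on_event(self, user_id, ts):
        window = self.recent[user_id]
        window.append(ts)
        while window and window[0] <= ts - self.lookback_s:
            window.popleft()  # evict events that fell out of the look-back window
        if len(window) >= self.min_count:
            self.members.add(user_id)
        else:
            self.members.discard(user_id)
        return user_id in self.members


audience = StreamingAudience(min_count=3, lookback_s=600)
for ts in (0, 100, 200):
    joined = audience.on_event("u1", ts)
print(joined)  # True: three events within ten minutes, known the moment the third arrives
```

A batch job evaluating the same rule would only notice "u1" at its next scheduled run, which is the staleness problem described above.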

Sequencing is a vital tool for sophisticated marketers who want to execute cross-channel campaigns. As we all learned in fifth-grade math class, order of operations matters, and that is very much the case with audience segmentation and activation.

For example, with a journey orchestration tool, a user who performs one action followed by a specific subsequent action is added to an audience segment, which can be connected to engagement tools like Facebook and Iterable. If the user takes a different sequence, they are added to a different audience, which is sent to Google and Attentive.
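Sequence-based routing can be sketched as ordered-subsequence matching. The action names, audience names, and rule table below are hypothetical; the destinations mirror the example. Rules are checked in order and the first match wins, which is itself an assumed design choice.

```python
# Hypothetical rules: ordered action sequence -> (audience, destinations).
SEQUENCE_RULES = [
    (("viewed_product", "added_to_cart"), "cart_intent", ["Facebook", "Iterable"]),
    (("viewed_product", "viewed_pricing"), "price_research", ["Google", "Attentive"]),
]


def contains_in_order(actions, sequence):
    """True if every step of `sequence` appears in `actions` in that order.

    Other actions may occur in between; `step in it` consumes the iterator,
    so each later step must be found after the previous one.
    """
    it = iter(actions)
    return all(step in it for step in sequence)


def route(actions):
    """Return (audience, destinations) for the first matching rule, else (None, [])."""
    for sequence, audience, destinations in SEQUENCE_RULES:
        if contains_in_order(actions, sequence):
            return audience, destinations
    return None, []


print(route(["viewed_product", "viewed_pricing"]))  # ('price_research', ['Google', 'Attentive'])
print(route(["added_to_cart", "viewed_product"]))   # (None, []): same actions, wrong order
```

The second call shows why order matters: the same two actions in reverse order match neither rule.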

By upleveling to intelligent pipes, teams can automatically identify patterns, anomalies, and trends within the data, perform real-time analysis, make data-driven predictions, and enable intelligent decisioning. Intelligent pipes are the path to using compute cycles more efficiently, deriving valuable insights, and taking actions based on predictions and calculations.

Takeaway: we need to stop focusing on optimizing the features of first-generation systems, the industry equivalent of building a “faster horse.” Let’s evolve: take a big step forward into the future and embrace the next great innovation in this space, a customer decision engine built on intelligent pipes.