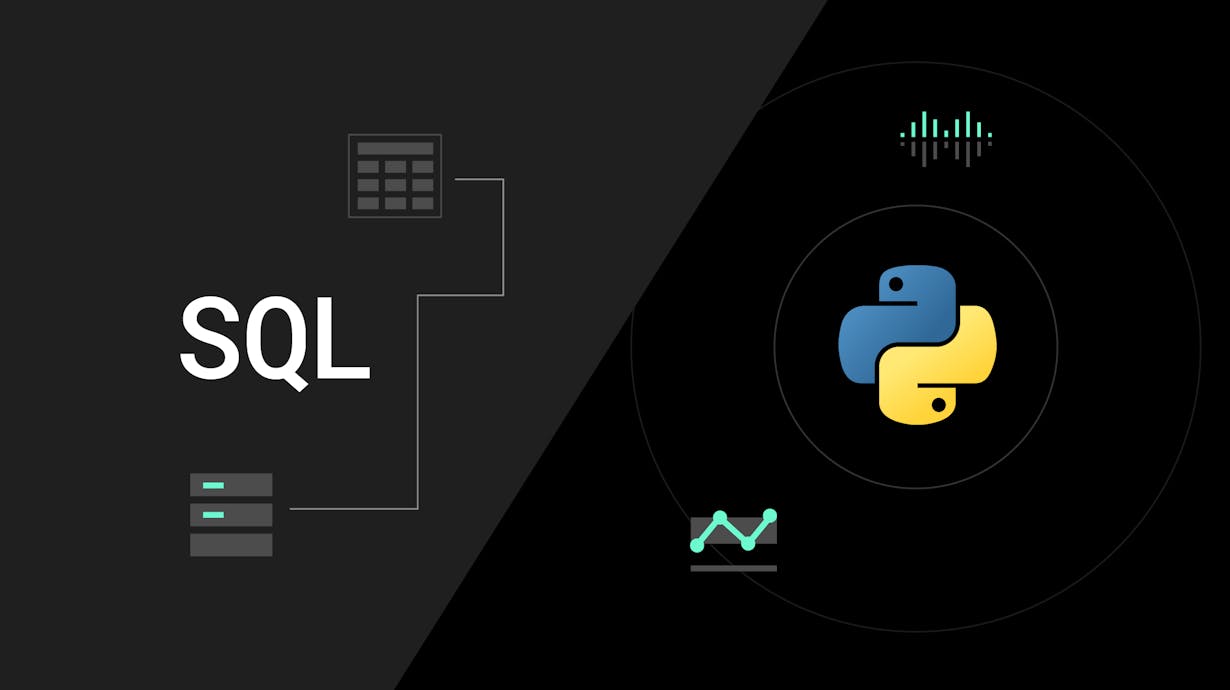

Python and SQL: Complementary tools for complex challenges in data science

While Data Scientists today have an ever-expanding list of toolkits, languages, libraries and platforms at their disposal, two mainstays––Python and SQL––are likely to remain staples of data analysis for years to come. Here, we’ll look at the role these languages play in the rapidly evolving field of Data Science.

If you’re reading this piece, odds are you’re well aware that data is a big deal in the world today. As consumers, we produce it with each click of the mouse and tap of the screen. As technologists, we think about the best ways to leverage it to improve products and drive decisions. And as human beings, we hope it can keep us healthier and safer, and bring more clarity to the decisions we make every day. The concept of “big data”––once an emerging trend and now a cliche––seems to have a connection to every article we read, every ad we see, and every email that lands in our inbox.

When data got “big,” so did Data Science, an interdisciplinary field that draws on traditional computer science as well as old and new programming tools, and uses the scientific method to extract meaningful insights from structured, semi-structured and unstructured data. Like traditional software engineers, data scientists rely heavily on knowledge of fundamental concepts in computer science, and deal with algorithmic challenges like managing trade-offs between time and memory. While traditional software engineers focus on building applications for end users, however, data scientists manipulate large sets of data to uncover actionable knowledge, which requires supplementary skill sets in mathematics, statistics and analytics.

“Data Scientist” consistently tops lists of the fastest-growing, highest-paying and most fulfilling jobs, both within the technology industry and in sectors including energy, financial services, public health and government. Back in 2012, Harvard Business Review dubbed data scientist the “sexiest job of the 21st century,” and the field lived up to this swooning superlative over the nine years that followed, as trends such as expanding cloud infrastructure and the growth of Machine Learning and AI fueled continuous job growth. Nearly a decade later, the outlook for data scientists is just as bright: the U.S. Bureau of Labor Statistics predicted 31% job growth between 2019 and 2029.

There is no doubt that the complexity of information that Data Scientists handle will continue to expand, so it’s natural to expect the tools and technologies at their disposal will evolve along with the tasks at hand. While keeping up with emerging skill sets is par for the course for any engineer, there are certain mainstay technologies that promise to remain relevant to the processes of retrieving, manipulating and deeply analyzing data for years to come. Two of these tools are SQL (Structured Query Language) and Python. Here, we’ll take a look at why these two languages remain integral tools for data analysis, the roles they each play in Data Science, and which tool to use depending on the use case.

SQL: A time-honored tool for data retrieval

Structured Query Language (SQL) has been a cornerstone of data analysis since its introduction in the 1970s shortly after the development of relational databases. SQL is a richly featured language that allows users to manipulate data in very specific and targeted ways, and delivers the ability to perform the gamut of “CRUD” (create, read, update and delete) operations on data stored in tables within a Relational Database Management System.
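To make the four “CRUD” operations concrete, here is a minimal sketch that runs them against an in-memory SQLite database through Python's built-in `sqlite3` module. The `products` table and its rows are hypothetical and exist only for illustration; a production system would more likely use a server-based RDBMS.

```python
import sqlite3

# In-memory database; the table and rows below are hypothetical illustration data.
conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Create: define a table and insert two rows.
cur.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL)")
cur.executemany(
    "INSERT INTO products (name, price) VALUES (?, ?)",
    [("notebook", 4.5), ("pen", 1.25)],
)

# Read: fetch every row in insertion order.
rows_before = cur.execute("SELECT name, price FROM products ORDER BY id").fetchall()

# Update: change the price of a single product.
cur.execute("UPDATE products SET price = ? WHERE name = ?", (1.5, "pen"))

# Delete: remove a product by name.
cur.execute("DELETE FROM products WHERE name = ?", ("notebook",))

rows_after = cur.execute("SELECT name, price FROM products ORDER BY id").fetchall()
conn.commit()
conn.close()

print(rows_before)
print(rows_after)
```

Each statement names the rows it affects through a `WHERE` clause, which is what makes SQL's changes so targeted.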

While SQL is an excellent tool for extracting data from a database, it is limited in what it can do with data once it has been retrieved. Transforming and manipulating data in complex ways, and performing the higher-level analyses that are common practice in modern data science, such as regressions, trend lines, statistical analysis and work with time series datasets, would be challenging, if not altogether impossible, using SQL alone.

The Python ecosystem: Expansive and versatile tools for data analysis

Python is one of the most popular programming languages in the world, thanks to its versatility, developer-friendly conventions, and broad ecosystem of helper libraries that span a wide range of use cases. Python also has a very active and supportive community maintaining the language and contributing to open-source projects, including a sizable group that leverages it to draw actionable insights from data.

Comparing SQL and Python for querying data

Before we dive deeper into what Python contributes to the ecosystem of data science tools, let’s compare it to SQL in performing a data querying operation. Before Data Scientists are able to perform any complex analysis on data, they need to retrieve it from wherever it is stored, and render it into a usable format. To perform this task with Python, most data scientists would probably use the Pandas library. SQL, on the other hand, can do the job of retrieving data without the aid of extensions or libraries. In fact, comprehensible syntax that reads almost like plain language combined with the ability to perform complex data retrieval and aggregation is a central reason why SQL remains an integral tool for data scientists.

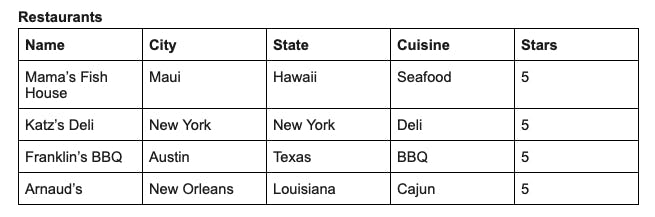

Say we have the database table below called restaurants, which represents information about several restaurants in cities around the United States:

If we wanted to select all records from this table in the city of Maui, the SQL query to do so would look like this:

SELECT * FROM restaurants

WHERE City = 'Maui';

If we were to perform the same query with Pandas, a popular Python library for working with tabular data, there would be a few more steps involved, and our query would not be as immediately readable. First, we would have to import the Pandas library itself, then load the data from the table into a `DataFrame`, and only then could we run our lookup and return data.

That process would look something like this:

# Import Pandas library

import pandas as pd

# Import the table as a DataFrame (assuming the table is stored as a CSV)

restaurants = pd.read_csv('restaurants.csv')

# Select all rows in restaurants where city is equal to “Maui”

restaurants.loc[restaurants['City'] == 'Maui']

The SQL version of this query is arguably more comprehensible and concise, which matters in any software or data analysis project where large teams of developers work together. This is especially useful when performing precise and complex queries in which multiple tables are joined together, and record inclusion in a selection is based on multi-layer conditional statements. Now in its fifth decade of existence, SQL remains an essential tool for data scientists for this very reason.
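To try the comparison end to end, the sketch below runs both versions of the lookup against the same data and checks that they return the same rows. It assumes an in-memory SQLite database in place of a real server, and the three restaurant rows are made up for illustration; only the `City` column and the "Maui" filter come from the example above.

```python
import sqlite3

import pandas as pd

# Hypothetical rows standing in for the restaurants table.
restaurants = pd.DataFrame(
    {
        "Name": ["Aloha Grill", "Harbor Cafe", "Sunset Shack"],
        "City": ["Maui", "Boston", "Maui"],
    }
)

# Copy the DataFrame into an in-memory SQLite table so SQL can query it.
conn = sqlite3.connect(":memory:")
restaurants.to_sql("restaurants", conn, index=False)

# SQL version of the lookup, read back into a DataFrame.
sql_result = pd.read_sql_query(
    "SELECT * FROM restaurants WHERE City = 'Maui'", conn
)
conn.close()

# Pandas version: a boolean mask passed to .loc with square brackets.
pandas_result = restaurants.loc[restaurants["City"] == "Maui"]

print(sorted(sql_result["Name"]) == sorted(pandas_result["Name"]))
```

In practice the two are often combined in exactly this way: SQL narrows the data down inside the database, and `pd.read_sql_query` hands the result to Pandas for further analysis.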

For Data Science applications, Python’s utility largely begins where SQL’s ends––that is, once the right dataset has been retrieved, Python can step in to perform complex statistical analysis, create visualizations, and enable a wide variety of other functions having to do with processing, manipulating, and experimenting with data. Python’s libraries for data analysis are excellent for handling the complex mathematics and statistical analysis that underlies many processes in Data Science, allowing data scientists to spend more time exploring and experimenting rather than carrying out laborious calculations.
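As a rough illustration of that handoff, the sketch below retrieves rows with SQL and then fits a straight trend line with NumPy's `polyfit`. The `monthly_sales` table and its figures are hypothetical, and a first-degree polynomial fit is just one simple way to estimate a trend.

```python
import sqlite3

import numpy as np

# Hypothetical table of monthly revenue figures, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE monthly_sales (month INTEGER, revenue REAL)")
conn.executemany(
    "INSERT INTO monthly_sales VALUES (?, ?)",
    [(1, 10.0), (2, 12.0), (3, 14.0), (4, 16.0)],
)

# SQL handles retrieval and ordering.
rows = conn.execute(
    "SELECT month, revenue FROM monthly_sales ORDER BY month"
).fetchall()
conn.close()

# Python handles the analysis: split the columns and fit a degree-1 polynomial.
months, revenue = np.array(rows).T
slope, intercept = np.polyfit(months, revenue, deg=1)

print(f"trend: revenue = {slope:.2f} * month + {intercept:.2f}")
```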

Python’s vast array of libraries for data science helps organizations efficiently turn questions and hunches into actionable insights. To that end, let’s explore some of the most popular and powerful Python libraries that let data scientists explore and innovate within different areas of data analysis.

NumPy is used for various applications within scientific computing, and is especially useful to data scientists performing Machine Learning and Deep Learning processes. Its name stands for “Numerical Python,” and its support for vectorization, indexing, and broadcasting is invaluable to data scientists when dealing with multidimensional array objects.
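The three features named above can be seen in a few lines. This is a small sketch on a made-up 3×2 array, not a benchmark or a complete tour of the library.

```python
import numpy as np

# Hypothetical data: 3 samples (rows) with 2 features (columns).
data = np.array([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]])

# Vectorization: one expression operates on every element, with no Python loop.
doubled = data * 2

# Indexing: a boolean mask keeps only the rows whose first feature exceeds 1.
selected = data[data[:, 0] > 1]

# Broadcasting: the per-column mean (shape (2,)) is stretched across
# all three rows (shape (3, 2)) so each column is centered on zero.
centered = data - data.mean(axis=0)

print(doubled)
print(selected)
print(centered)
```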

Scikit-learn is an open-source Machine Learning library that is built on NumPy as well as other Python libraries for data analysis. At its most basic level, scikit-learn is a tool for building Machine Learning models. Under the hood, it contains a variety of tools for Machine Learning tasks like regression, classification, clustering and preprocessing, and exposes them as Python abstractions. This means that data scientists do not have to write these complex algorithms from scratch every time an analytical use case calls for them, and can instead rely on consistent, reusable estimators like:

`sklearn.linear_model.LinearRegression()`
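As a minimal sketch of how such an estimator is used, the example below fits `LinearRegression` to a handful of made-up points that lie exactly on the line y = 2x + 1, then predicts a new value. The data is hypothetical and chosen so the fitted coefficients are easy to check by eye.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Hypothetical training data lying exactly on y = 2x + 1.
X = np.array([[1.0], [2.0], [3.0], [4.0]])  # one feature per row
y = np.array([3.0, 5.0, 7.0, 9.0])

# Fit the model, then predict the target for an unseen input.
model = LinearRegression()
model.fit(X, y)
prediction = model.predict(np.array([[5.0]]))

print(model.coef_, model.intercept_, prediction)
```

Other scikit-learn estimators follow the same `fit` and `predict` pattern, which is what makes it straightforward to swap one model for another.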

Developed by the Google Brain team, TensorFlow has gained a reputation as a cutting-edge library for both Machine Learning and Deep Learning. As an end-to-end ML library with its own ecosystem of resources, libraries and tools, TensorFlow is enabling the research community to push the boundaries of what is possible in Deep Learning and ML/DL-powered applications.

Developed at Facebook, PyTorch is an offshoot of the Lua-based Torch framework, and has played a significant role in innovation within the Deep Learning space by making DL models less expensive and computationally faster.