Data quality vital signs: Five methods for evaluating the health of your data

It’s simple: Bad data quality leads to bad business outcomes. What’s not so simple is knowing whether the data at your disposal is truly accurate and reliable. This article highlights metrics and processes you can use to quickly evaluate the health of your data, no matter where your company falls on the data maturity curve.

Consumers today produce more data across more channels than ever before. In order to translate this slew of information into data-driven decisions and personalized experiences, however, companies need to deliver their customer data to an increasing number of tools for activation. In the absence of a data infrastructure tool capable of unifying cross-channel data points into customer profiles, these new activation systems often receive data independently of one another through their native APIs and SDKs.

Over time, as each of these tools collects more data points and stores them according to its own schema requirements, inconsistencies can develop between data sets living across the organization’s stack. As a result, the quality of the data at the end users’ disposal steadily declines. While information about your customers can be your company’s greatest asset, it can also be a serious liability. For example, inaccurate identifiers on customer profiles could result in “personalization” campaigns turning very impersonal. Incorrectly collected or aggregated user events could give your product team an inaccurate picture of user journeys, and send your next app feature down the wrong path. The list goes on.

Any team that depends on data also depends on data quality, and stakeholders who make decisions about the way data is collected, stored, and activated have a vested interest in prioritizing data quality from collection to activation. Unlike human beings who can seek out a doctor when they don’t feel well, however, data is not so vocal about its health. When you have a customer event with an incorrect identifier, or two profiles living in separate systems that actually belong to the same user, this data simply sits in your systems of record, waiting to be queried and used to target your customers and prospects. Many serious illnesses don’t cause symptoms until they are very advanced, and similarly, data quality problems are often not apparent until they begin causing negative outcomes for your business.

Just like humans should take control of their health by seeking preventative healthcare, companies should regularly assess the health of their customer data. In this article, we’ll look at some ways that you can do this, starting by checking high-level metrics that can serve as general guideposts. We’ll then move to more advanced processes that require involvement of data engineers and more sophisticated data infrastructure. Finally, we’ll cover a highly reliable way to assess data quality that organizations with a mature data practice can leverage.

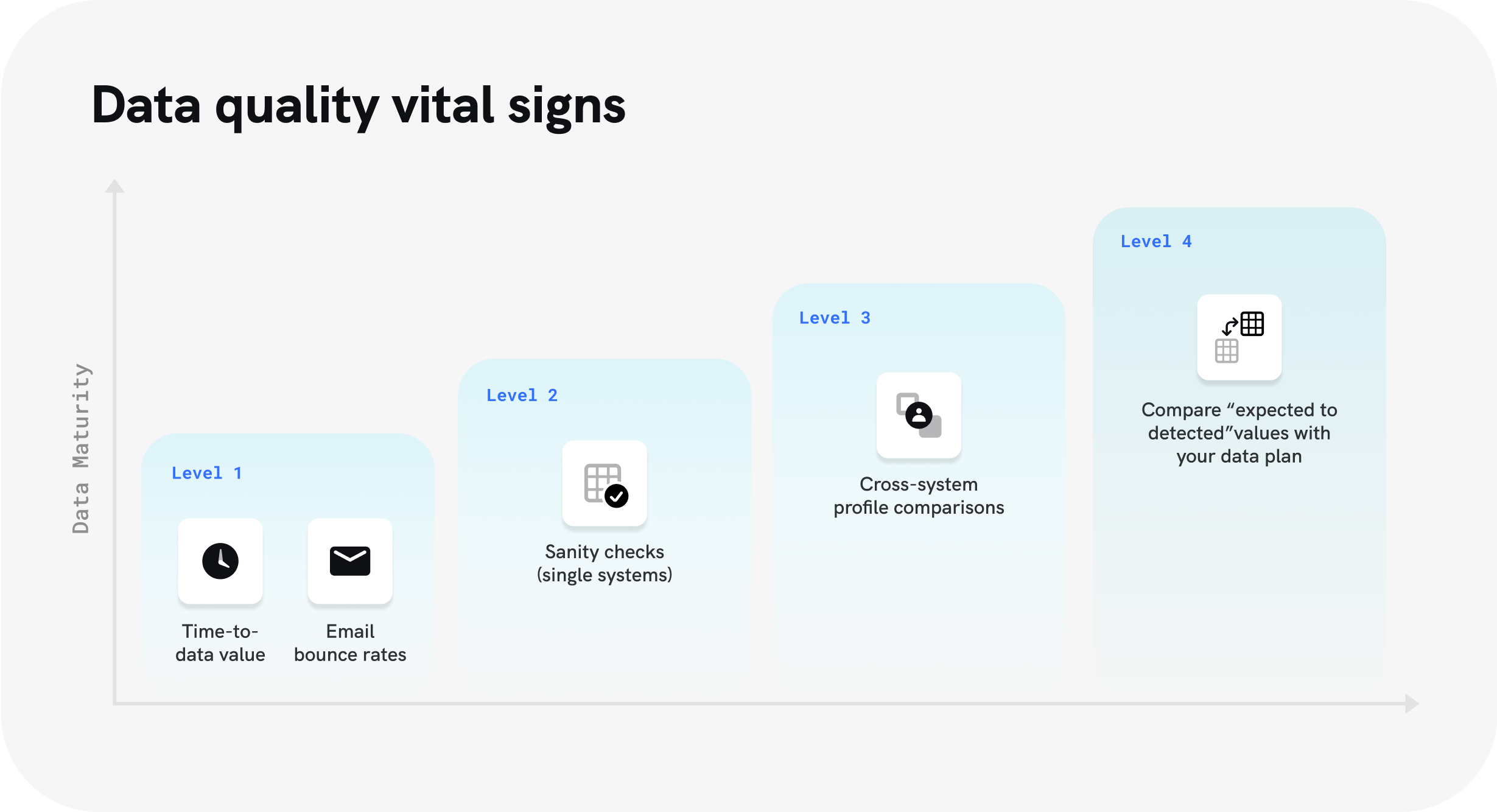

Level 1: Tracking high-level metrics

Email bounce rates

One of the simplest ways to monitor the overall quality of your data is to keep a close eye on your email bounce rates. Nearly every company maintains customer email lists and sends messages to customers with some regularity. Today’s email service providers make it easy to keep track of your bounce rates, along with other metrics like click-through and open rates. In addition to tracking the success of your email campaigns, bounce rates can also provide a window into the overall health of your data.

To get an idea of where your bounce rates currently stand, Mailchimp provides this useful set of email marketing benchmarks broken out by industry. If you find that your bounce rates are higher than the benchmarks for your industry, or if they are increasing over time, this points to a problem with the timeliness of your data. And since out-of-date email records could mean that other customer identifiers have gone stale as well, this issue may not be limited to the data you’re using for email campaigns. When bounce rates increase, treat it as a signal to check the freshness of data living in other systems, and to purge or update outdated records where necessary.
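As a rough illustration of the two checks described above (comparing against a benchmark and watching the trend), here is a minimal Python sketch. The function names, the campaign numbers, and the 1% benchmark are all hypothetical placeholders; substitute the figures your email service provider reports and the published benchmark for your industry.

```python
def bounce_rate(sent: int, bounced: int) -> float:
    """Return the fraction of sent emails that bounced."""
    if sent <= 0:
        raise ValueError("sent must be positive")
    return bounced / sent


def bounce_flags(campaigns, benchmark):
    """Check recent campaigns against a benchmark and for an upward trend.

    campaigns: list of (sent, bounced) tuples, oldest first.
    benchmark: industry bounce rate as a fraction (assumed input, not a real figure).
    Returns (above_benchmark, rising).
    """
    rates = [bounce_rate(sent, bounced) for sent, bounced in campaigns]
    above_benchmark = rates[-1] > benchmark
    # "Rising" here means every campaign bounced more than the one before it.
    rising = len(rates) >= 2 and all(a < b for a, b in zip(rates, rates[1:]))
    return above_benchmark, rising


# Hypothetical numbers for three consecutive campaigns.
campaigns = [(10_000, 50), (10_000, 80), (10_000, 120)]
print(bounce_flags(campaigns, benchmark=0.01))  # (True, True)
```

Either flag on its own is only a prompt to look closer at record freshness, not a diagnosis.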

Time-to-data value

“Time-to-data value” refers to the amount of time and internal effort it takes to collect your data and leverage it to drive value for your business, and it can serve as another valuable benchmark for the overall health of your data. If the time between collecting and activating your data begins to noticeably increase, or if data engineers and analysts have increasing difficulty preparing data sets for use, it might be time to take a closer look at the data sets in question.

Problems with many dimensions of data quality, including accuracy, completeness, and consistency, can lengthen time-to-data value. If records across systems are stored in incompatible formats, for instance, data engineers will need to spend more time transforming data sets before they can query audiences. If records are found to be inaccurate or incomplete, data sets may need to be reviewed and cleansed on an ad-hoc basis, which can significantly slow how quickly data drives results.
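One way to make the collect-to-activate lag described in this section measurable is to log two timestamps per data set and track the median gap between them. The sketch below assumes you can record when a data set was collected and when it was first activated; the function names and the 25% tolerance are illustrative choices, not an established standard.

```python
from datetime import datetime
from statistics import median


def median_lag_hours(records):
    """records: iterable of (collected_at, activated_at) datetime pairs."""
    lags = [
        (activated - collected).total_seconds() / 3600
        for collected, activated in records
    ]
    return median(lags)


def lag_is_growing(previous, current, tolerance=1.25):
    """Flag when the current median lag exceeds the previous one by the tolerance factor."""
    return median_lag_hours(current) > tolerance * median_lag_hours(previous)


# Hypothetical timestamps for two reporting periods.
last_month = [
    (datetime(2024, 1, 1, 8), datetime(2024, 1, 1, 12)),
    (datetime(2024, 1, 2, 8), datetime(2024, 1, 2, 14)),
]
this_month = [
    (datetime(2024, 2, 1, 8), datetime(2024, 2, 1, 18)),
    (datetime(2024, 2, 2, 8), datetime(2024, 2, 2, 20)),
]
print(median_lag_hours(last_month), median_lag_hours(this_month))  # 5.0 11.0
print(lag_is_growing(last_month, this_month))  # True
```

This captures only the time component; the internal effort spent preparing data sets would need to be tracked separately.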

Level 2: Directly checking data quality

Single-system sanity checks

Another method of validating data quality is to perform what’s known as a sanity check. This method of data validation requires taking a random sample of records within a database, and comparing the values, data types, and structure of this sample to a set of expectations. To perform sanity checks on customer data within an engagement or analytics tool, query a random sample of a significant number (at least 100) of user profiles from the system. Then, compare the values for an identifier on each profile, such as email, to what you would expect them to contain. In the case of email, how many blank email values are found? How many values contain valid addresses? If you see large numbers of blank or invalid records, this is an indication that the quality of the rest of the data in this system may be compromised, and that a more complete analysis of this data set is necessary.
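The email check described above can be sketched in a few lines of Python. This assumes the profiles have already been exported from the tool as a list of dictionaries with an `email` key; the function name and the deliberately loose address pattern are illustrative assumptions, and a production check would likely use a stricter validator.

```python
import random
import re

# Loose shape check (something@something.tld), not full address validation.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def email_sanity_check(profiles, sample_size=100, seed=None):
    """Sample profiles at random and count blank, invalid, and valid emails."""
    rng = random.Random(seed)
    sample = rng.sample(profiles, min(sample_size, len(profiles)))
    blank = invalid = 0
    for profile in sample:
        email = (profile.get("email") or "").strip()
        if not email:
            blank += 1
        elif not EMAIL_PATTERN.match(email):
            invalid += 1
    total = len(sample)
    return {
        "sampled": total,
        "blank": blank,
        "invalid": invalid,
        "valid": total - blank - invalid,
    }


# Hypothetical export; a real check would draw from at least 100 profiles.
profiles = [
    {"email": "ana@example.com"},
    {"email": ""},
    {"email": "not-an-email"},
    {"email": None},
]
print(email_sanity_check(profiles))
# {'sampled': 4, 'blank': 2, 'invalid': 1, 'valid': 1}
```

The same pattern extends to other identifiers by swapping the field name and the expectation being tested.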

Level 3: Comparing data sets across systems

Until now, we’ve discussed high-level flags that can signal a larger issue with data quality. Using email bounce rates, time-to-data value, and sanity-check results as indicators of data quality is similar to checking a patient’s blood pressure to assess their health, however. While abnormal results might suggest that there is a problem that needs to be addressed, they cannot precisely diagnose this problem, nor can they prescribe a cure. For that, you’ll need to look a little deeper into your data, and employ some analysis techniques that can more confidently assess data accuracy and consistency.

Cross-system profile comparisons

In organizations that collect, process, store, and activate large amounts of data, different teams commonly rely on separate systems for their data use cases. In many cases, and especially when identity resolution is handled in individual downstream systems, these separate systems can turn into data silos that house fragmented and inconsistent copies of incoming data. For more information on how and why this problem occurs, refer to this blog post.

To determine whether this data quality issue is impacting the data sets living across your activation tools, your data engineers and analysts can perform a relatively straightforward query and comparison across systems. To do this, identify two systems in which you would expect to find the same set of user profiles, for instance a product analytics tool like Indicative and an engagement tool like Braze. Select a random sample of enough users to yield statistically meaningful results, and query both tools to return the same set of user profiles from each system.

Each profile in Indicative should completely match its counterpart in Braze: all identifiers (email, phone number, device IDs, etc.) and user attributes (first name, last name, address, age, etc.) should be identical. While a small amount of deviation between data sets is to be expected due to inevitable data degradation in those tools, wide variations can indicate that there is a fundamental problem with data accuracy in these systems. If this is the case, you may consider running a deeper cross-system comparison and purging all inconsistent records. This will not stop the problem from developing again, however. The best way to prevent it from recurring is to put a robust and flexible identity resolution capability in place.
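Once the same sample of profiles has been exported from both tools, the comparison itself is a field-by-field diff. The Python sketch below assumes each export has been loaded into a dictionary keyed by a shared user ID; it does not call either vendor’s API, and the function name, field names, and sample data are hypothetical.

```python
def compare_profiles(system_a, system_b, fields):
    """Diff two exports of user profiles.

    system_a, system_b: dicts mapping user ID -> profile dict.
    fields: identifiers and attributes expected to match across systems.
    """
    report = {"missing_in_a": [], "missing_in_b": [], "mismatched": {}}
    for user_id in sorted(set(system_a) | set(system_b)):
        if user_id not in system_a:
            report["missing_in_a"].append(user_id)
        elif user_id not in system_b:
            report["missing_in_b"].append(user_id)
        else:
            # Record which fields differ for users present in both systems.
            diffs = [
                field for field in fields
                if system_a[user_id].get(field) != system_b[user_id].get(field)
            ]
            if diffs:
                report["mismatched"][user_id] = diffs
    return report


# Hypothetical exports from an analytics tool and an engagement tool.
analytics = {
    "u1": {"email": "ana@example.com", "first_name": "Ana"},
    "u2": {"email": "bo@example.com", "first_name": "Bo"},
}
engagement = {
    "u1": {"email": "ana@example.com", "first_name": "Ana"},
    "u2": {"email": "bo@old-domain.com", "first_name": "Bo"},
    "u3": {"email": "cy@example.com", "first_name": "Cy"},
}
print(compare_profiles(analytics, engagement, ["email", "first_name"]))
# {'missing_in_a': ['u3'], 'missing_in_b': [], 'mismatched': {'u2': ['email']}}
```

Dividing the number of mismatched or missing users by the sample size gives a simple inconsistency rate to track over time.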

Level 4: Your data plan is your standard

Comparing “expected to detected” values

For companies that create and maintain data plans, there is a more reliable and efficient variation on the analysis described above. Rather than querying the same set of user profiles from different systems and comparing like profiles to each other, you can use your company’s data model to define the values and format you expect to see on each user profile in any given system. This allows you to check the downstream profiles against a universal standard that you have defined, which provides a higher standard of accuracy than comparing downstream profiles to each other.
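To illustrate the “expected to detected” idea, the following Python sketch encodes a small data plan as a dictionary and reports where a downstream profile departs from it. The `DATA_PLAN` structure, its field names, and the rule keys (`type`, `required`, `pattern`) are hypothetical; a real data plan would come from your own data model or planning tool.

```python
import re

# Hypothetical data plan: the expected type, presence, and format of each field.
DATA_PLAN = {
    "email": {"type": str, "required": True,
              "pattern": r"^[^@\s]+@[^@\s]+\.[^@\s]+$"},
    "first_name": {"type": str, "required": True},
    "age": {"type": int, "required": False},
}


def validate_profile(profile, plan=DATA_PLAN):
    """Return a list of ways the detected profile departs from the plan."""
    violations = []
    for field, rule in plan.items():
        value = profile.get(field)
        if value is None:
            if rule["required"]:
                violations.append(f"{field}: missing")
            continue
        if not isinstance(value, rule["type"]):
            violations.append(
                f"{field}: expected {rule['type'].__name__}, "
                f"got {type(value).__name__}"
            )
            continue
        pattern = rule.get("pattern")
        if pattern and not re.match(pattern, value):
            violations.append(f"{field}: does not match expected format")
    # Fields the plan does not define are also worth surfacing.
    for field in profile:
        if field not in plan:
            violations.append(f"{field}: not in data plan")
    return violations


print(validate_profile({"email": "nope", "age": "31", "plan_tier": "pro"}))
# ['email: does not match expected format', 'first_name: missing',
#  'age: expected int, got str', 'plan_tier: not in data plan']
```

Because every system is checked against the same plan, a violation points to the system that is wrong rather than only showing that two systems disagree.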

An ounce of prevention is worth a pound of cure

All of the metrics and methods of evaluating data quality discussed above can help you leverage data with more confidence. However, none of them should be seen as long-term solutions for data quality. To truly address this issue, companies should develop a robust data planning practice, and adopt other systems and practices that help achieve data quality at the point of collection and protect it throughout delivery and activation.