How does Snowflake work? A simple explanation of the popular data warehouse

Learn more about what Snowflake is and how it fits into your data stack.

Jumping between different data projects due to resource limitations can be frustrating and inefficient.

Yet, until recently, most businesses ran data operations this way. Engineers would often need to stop resource-intensive queries so they could mine a database for urgent customer insights. These same data teams would also frequently need to run long queries overnight, when compute resources were not in demand.

But now, thanks to a highly scalable, available, and cost-effective cloud data warehouse like Snowflake, businesses can harness their data without worrying about resource contention.

Snowflake is an elastically scalable cloud data warehouse

Snowflake is a cloud data warehouse that can store and analyze all your data records in one place. It can automatically scale its compute resources up or down to load, integrate, and analyze data.

As a result, you can run virtually any number of workloads across many users at the same time without worrying about resource contention. Workloads can range from batch data processing to interactive analytics to complex data pipelines.

Consider a typical scenario where teams want to run different queries on customer data to answer various questions. Your product team may want to understand engagement and retention, while your marketing team may want to understand acquisition costs and customer lifetime value. Running all these queries on one compute resource cluster would create competition for resources, slowing query performance for both teams. But with Snowflake, you can create separate virtual warehouses for each team, allowing all stakeholders to quickly get the answers they need.
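As a rough sketch of the per-team setup described above, the Python snippet below builds one `CREATE WAREHOUSE` statement per team. The warehouse names, sizes, and the 60-second suspend timeout are hypothetical values chosen for illustration, and the snippet only assembles SQL text; running it against Snowflake would require a connection and suitable privileges.

```python
# Hypothetical team warehouses and sizes, chosen only for illustration.
TEAM_WAREHOUSES = {
    "PRODUCT_WH": "SMALL",
    "MARKETING_WH": "XSMALL",
}


def create_warehouse_sql(name: str, size: str, auto_suspend_s: int = 60) -> str:
    """Build a CREATE WAREHOUSE statement for one team's isolated compute."""
    return (
        f"CREATE WAREHOUSE IF NOT EXISTS {name} "
        f"WITH WAREHOUSE_SIZE = '{size}' "
        f"AUTO_SUSPEND = {auto_suspend_s} "  # suspend after this many idle seconds
        "AUTO_RESUME = TRUE"  # resume when the next query arrives
    )


statements = [create_warehouse_sql(n, s) for n, s in TEAM_WAREHOUSES.items()]
for stmt in statements:
    print(stmt + ";")
```

Because each team's queries run on their own warehouse, a heavy retention analysis from the product team would not compete for compute with a marketing query over the same customer data.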

Snowflake can also automatically start another compute cluster whenever one cluster is unable to handle all incoming queries, and then balance the load between the two clusters, so queries are less likely to queue up or slow down during peaks in demand.
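The scale-out behavior can be pictured with a toy model. The sketch below is not Snowflake's actual scheduling logic; it assumes a fixed, hypothetical number of concurrent queries that one cluster can serve and a configurable minimum and maximum cluster count (similar in spirit to the `MIN_CLUSTER_COUNT` and `MAX_CLUSTER_COUNT` settings on a multi-cluster warehouse).

```python
import math


def clusters_needed(concurrent_queries: int, per_cluster_capacity: int,
                    min_clusters: int = 1, max_clusters: int = 3) -> int:
    """Return how many clusters a toy multi-cluster warehouse would run."""
    if per_cluster_capacity <= 0:
        raise ValueError("per_cluster_capacity must be positive")
    wanted = math.ceil(concurrent_queries / per_cluster_capacity)
    # Never go below the minimum or above the configured maximum.
    return max(min_clusters, min(max_clusters, wanted))


def balance(concurrent_queries: int, clusters: int) -> list[int]:
    """Spread queries as evenly as possible across the running clusters."""
    base, extra = divmod(concurrent_queries, clusters)
    return [base + (1 if i < extra else 0) for i in range(clusters)]


# 12 queries with a hypothetical capacity of 8 per cluster: a second cluster starts.
n = clusters_needed(12, per_cluster_capacity=8)
print(n, balance(12, n))
```

In this model, demand that fits on one cluster keeps a single cluster running, and demand beyond the maximum is capped, which is where queries would start to queue.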

Because Snowflake can scale on-demand capacity and performance as needed, data teams no longer need to run upfront capacity planning exercises. Nor do they need to maintain costly oversized data warehouses that remain mostly underutilized.

Snowflake’s architecture automatically allocates the right resources

Snowflake’s decoupled storage, compute, and services architecture enables the platform to automatically deliver the optimal set of IO, memory, and CPU resources for each workload and usage scenario.

Snowflake uses a new multi-cluster, shared data architecture that decouples storage, compute resources, and system services. Snowflake’s architecture has the following three components:

- Storage: Snowflake uses a scalable cloud storage service to ensure a high degree of data replication, scalability, and availability without much manual user intervention. It allows users to organize information in databases, as per their needs.

- Compute: Snowflake uses massively parallel processing (MPP) clusters to allocate compute resources for tasks like loading, transforming, and querying data. It allows users to isolate workloads within particular virtual warehouses. Which databases in the storage layer a query can reach is governed by the privileges granted to the user's role, not by the virtual warehouse itself.

- Cloud services: Snowflake uses a set of services for metadata, security, access control, and infrastructure management. Client applications such as the Snowflake web user interface, JDBC, or ODBC communicate with Snowflake through this layer.

Because Snowflake does not tightly couple storage, compute, and database services, it can dynamically modify configurations and scale resources up or down independently. Snowflake’s architecture also makes it possible to handle all your data in one system, so you don’t need to use specialized databases for different data formats.
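To illustrate what scaling compute independently of storage looks like in practice, the sketch below builds an `ALTER WAREHOUSE` statement that changes only the warehouse size and leaves stored data untouched. The warehouse name is hypothetical and the size list is a subset of the sizes Snowflake offers; the helper functions are illustrative, not part of any Snowflake client library.

```python
# A subset of Snowflake's warehouse sizes, smallest first.
SIZES = ["XSMALL", "SMALL", "MEDIUM", "LARGE", "XLARGE"]


def resize_sql(warehouse: str, size: str) -> str:
    """Build an ALTER WAREHOUSE statement that changes only compute size."""
    if size not in SIZES:
        raise ValueError(f"unknown size: {size}")
    return f"ALTER WAREHOUSE {warehouse} SET WAREHOUSE_SIZE = '{size}'"


def next_size_up(current: str) -> str:
    """Return the next larger size, or the same size if already at the top."""
    i = SIZES.index(current)
    return SIZES[min(i + 1, len(SIZES) - 1)]


# Hypothetical warehouse name, used only for illustration.
stmt = resize_sql("MARKETING_WH", next_size_up("SMALL"))
print(stmt)
```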

Snowflake is also capable of automatically adapting resources to a particular usage scenario, so that users no longer need to manually manage resources.

Snowflake offers native support for semi-structured data

Snowflake also offers native support for semi-structured data formats such as JSON, Avro, XML, and Parquet, without compromising completeness, performance, or flexibility.

Relational databases assume that all data records consistently adhere to a set of columns that are defined by the database schema. This static data model offers advantages such as indices and pruning, but breaks down when incoming data records don’t follow a defined database schema.

Today, machine learning models automatically generate a large chunk of business data in semi-structured data formats like JSON and XML. Traditional databases often can’t handle these data records because they do not follow a specified database schema.

To deal with these limitations, data teams used to force-fit semi-structured data into a schema. But this approach resulted in the loss of information and flexibility, and adding new fields to the schema caused existing data pipelines to misbehave. As an improvement, some databases began to treat semi-structured data as a special complex object. But users could not easily search, index, or load these special objects, so even this approach led to performance tradeoffs.

Snowflake’s VARIANT data type allows users to store semi-structured data records in their native form inside a relational table. Users can use this schema-less storage option for JSON, Avro, XML, and Parquet data records, loading them directly into Snowflake without defining a schema, losing information, or creating performance lags.
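A minimal sketch of how this might look for JSON follows. The table name, column name, and sample event are hypothetical, and the snippet only assembles SQL text: a table with a single VARIANT column, an insert that uses `PARSE_JSON`, and a query that reaches into the stored record with Snowflake's colon and dot path notation.

```python
import json

# Hypothetical table name and sample event, used only for illustration.
TABLE = "raw_events"
event = {"customer": {"id": "u-123", "plan": "pro"}, "action": "login"}

# One VARIANT column holds the whole record; no per-field schema is declared.
create_sql = f"CREATE TABLE IF NOT EXISTS {TABLE} (payload VARIANT)"

# PARSE_JSON turns a JSON string into a VARIANT value. Single quotes in the
# JSON text are doubled so the SQL string literal stays valid.
json_literal = json.dumps(event).replace("'", "''")
insert_sql = f"INSERT INTO {TABLE} SELECT PARSE_JSON('{json_literal}')"

# Colon and dot notation traverse the VARIANT; :: casts the result to a SQL type.
query_sql = (
    f"SELECT payload:customer.id::STRING AS customer_id, "
    f"payload:action::STRING AS action FROM {TABLE}"
)

for stmt in (create_sql, insert_sql, query_sql):
    print(stmt + ";")
```

String interpolation keeps the example short. Real pipelines would more likely bulk-load files or use bind parameters, which also avoids escaping issues with values that contain quotes or backslashes.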

Snowflake also automatically discovers the attributes of semi-structured data. It identifies similar attributes across records and organizes those attributes in a way that provides better compression and data access.
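The idea of discovering shared attributes can be illustrated with a toy example. The sketch below is not Snowflake's internal algorithm; it assumes a simple rule (an attribute becomes its own column if it appears in at least half of the records) to show why grouping similar attributes together helps: values of one attribute end up stored side by side, which tends to compress well and lets a query read only the attributes it needs.

```python
from collections import defaultdict


def flatten(record: dict, prefix: str = "") -> dict:
    """Flatten nested dicts into dotted attribute paths, e.g. 'customer.id'."""
    flat = {}
    for key, value in record.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat


def columnarize(records: list[dict], min_share: float = 0.5):
    """Split records into per-attribute columns plus a leftover 'rest' list.

    Attributes present in at least min_share of the records become columns
    (missing values are stored as None); rarer attributes stay in 'rest'.
    """
    flat_records = [flatten(r) for r in records]
    counts = defaultdict(int)
    for flat in flat_records:
        for path in flat:
            counts[path] += 1
    threshold = min_share * len(records)
    common = sorted(p for p, n in counts.items() if n >= threshold)
    columns = {p: [flat.get(p) for flat in flat_records] for p in common}
    rest = [{p: v for p, v in flat.items() if p not in columns}
            for flat in flat_records]
    return columns, rest


# Hypothetical sample records; only the second one carries a rare 'debug' field.
records = [
    {"customer": {"id": 1}, "action": "login"},
    {"customer": {"id": 2}, "action": "view", "debug": True},
    {"customer": {"id": 3}, "action": "login"},
]
columns, rest = columnarize(records)
print(columns)
print(rest)
```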

Leveraging Snowflake to support business growth

Snowflake is a massively parallel processing (MPP), pay-per-usage cloud data warehouse that takes full advantage of the cloud. As such, Snowflake is quickly becoming the data system of record for many organizations. Companies across industries are deploying Snowflake to store data such as purchase records, product/SKU information, and more, and are also running reporting and ML modeling on top of that data.

Data stored in Snowflake is often valuable to business teams across marketing, product, and customer support, who look to use data to personalize the customer experience and understand customer engagement. But these business teams often don't have the technical expertise to navigate the data warehouse, and therefore rely on data teams to extract the data they need from the warehouse—a process that delays time to value and distracts from high-priority work.

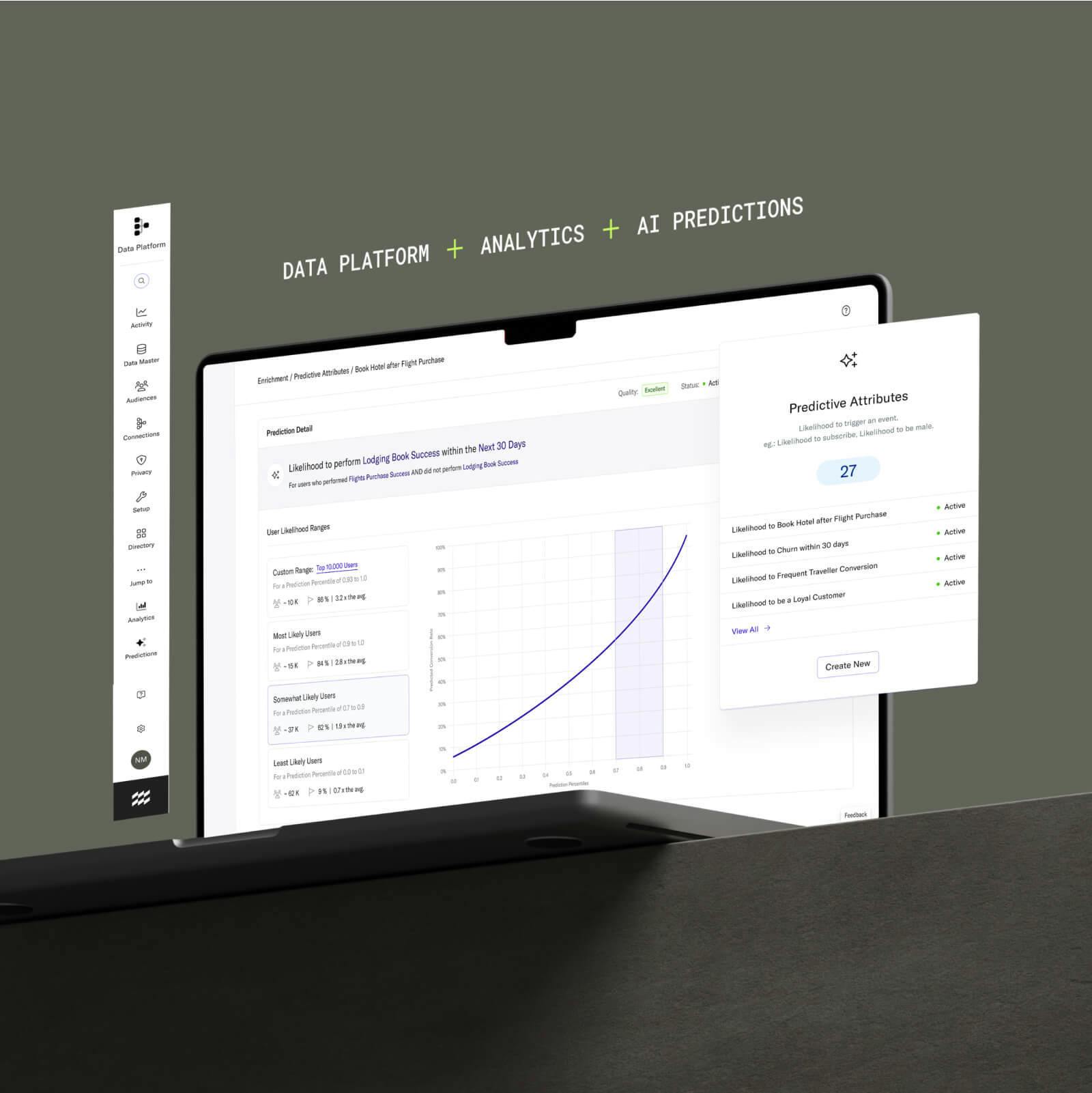

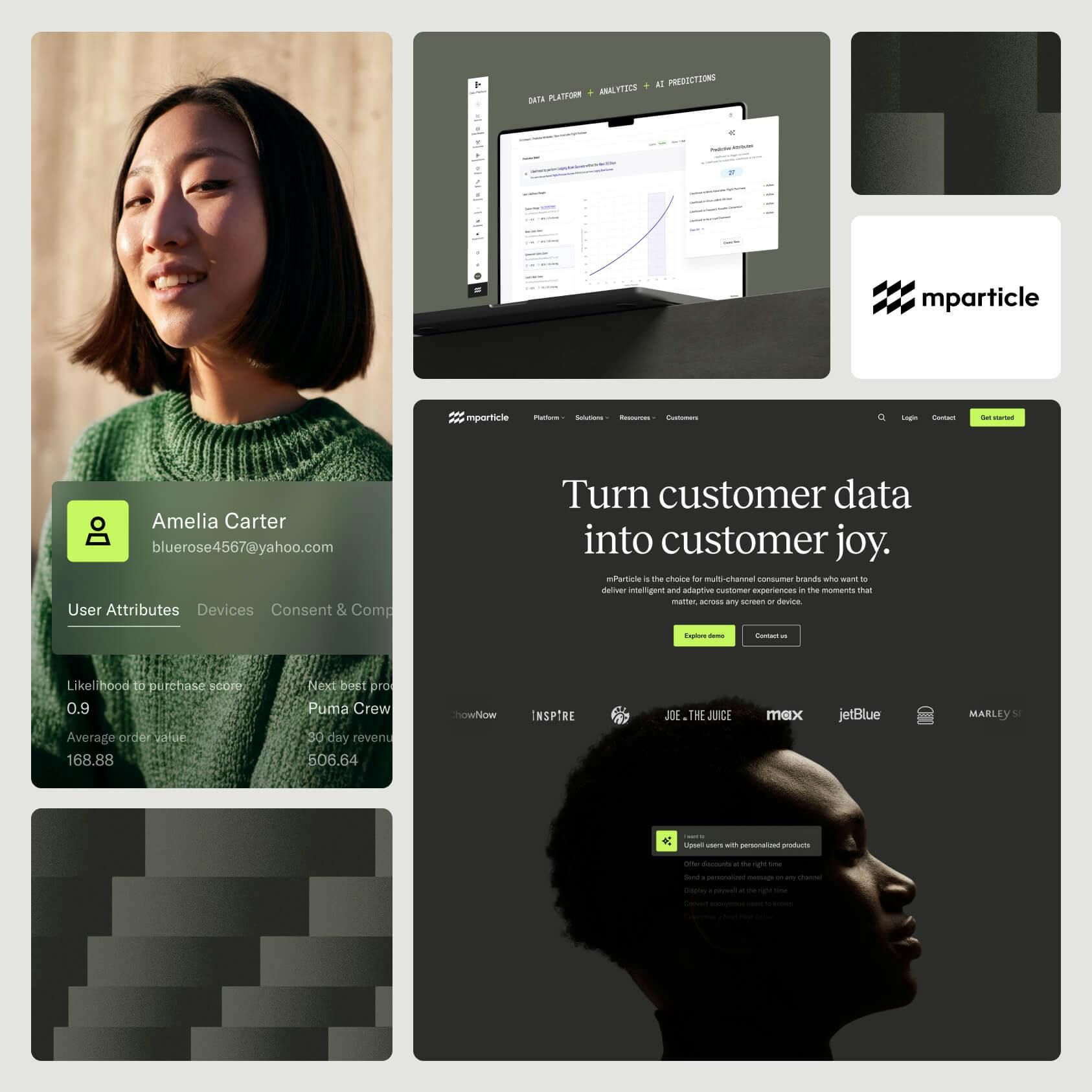

Solutions such as mParticle make it easy to ingest customer data such as in-store purchase records, calculated attributes, and user predictions from Snowflake into an accessible customer data infrastructure, where it can be activated by non-technical teams to support business initiatives. While Snowflake functions as the system of storage and analysis, mParticle functions as the system of movement in a company's data stack, making it possible for organizations to increase the ROI of their Snowflake deployment while also improving operational efficiency.