How to stop endless data shipping cycles

Engineers should ship products, not data. Product managers and marketers should experiment with data, increase personalization, and improve experiences. With a permanent data infrastructure, these goals are not mutually exclusive.

In today’s digital ecosystem, virtually every relationship is multi-channel. Customers regularly engage with brands across websites, apps, point of sale devices, smart TVs, gaming consoles, and many other touchpoints. Beyond sources of first-party data, companies also collect valuable information about their customers from third-party services like social networks, location providers, and many others.

The sheer magnitude of this data presents enticing and seemingly endless opportunities for companies to connect with customers and innovate on their products. Unless this data reaches the internal teams who can use it to power personalized experiences, guide long-term decisions, and inform product development, however, it cannot meet its potential.

Because data drives so many decisions within companies today, the number of internal stakeholders relying on access to current and complete views of the customer is greater than ever before. Keeping up with ever-changing data needs is a significant challenge that demands an extremely valuable resource in any company: the engineering team’s time.

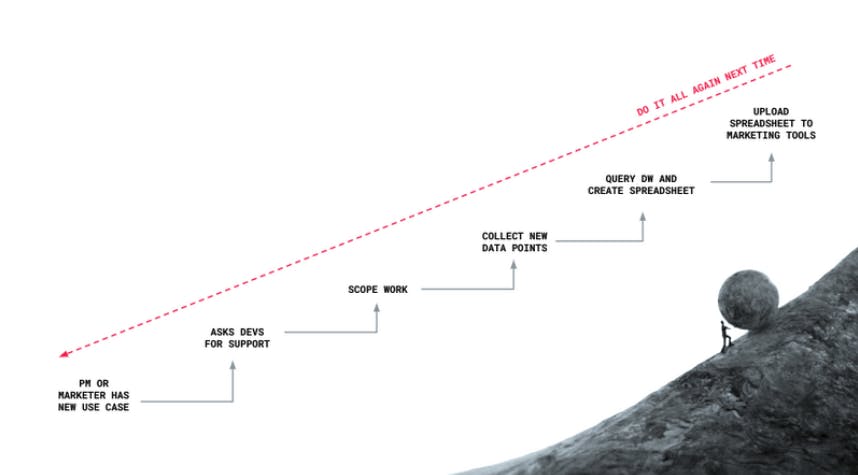

A data shipping cycle without Customer Data Infrastructure (CDI)

Let’s step through an example of how data requests typically play out between non-technical stakeholders and the engineers who capture and deliver the data. First, some internal team, usually product management or marketing, has a use case for a specific subset of customer data, like sending targeted emails to customers who have made a purchase in the last 30 days. They want this email to include text, images, and links based on each recipient’s preferences.

When the engineering team receives this request, the first step is to determine the scope of the work by asking questions like:

- What data is needed to service this use case?

- Are we already collecting this data? If so, in what system(s) does it live?

- What identities are attached to the data, if any?

- Will any existing IDs allow the marketers/product managers to contact these customers the way they want to?

- Does this use case meet our data privacy obligations?

If new data points need to be collected, frontend developers may need to deploy new SDKs across the company’s apps, sites, and other touchpoints, and backend developers could be tasked with aggregating it from downstream systems. If the data exists in a location like a data warehouse, engineers or data scientists will likely have to write manual queries for that tool to retrieve the data, often in the form of a minimally structured document like a CSV or large data file. The engineers then need to upload the data to a third-party tool or hand it directly to the team that requested it.
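To make the one-off nature of this work concrete, here is a minimal sketch of the kind of manual export described above. It assumes a hypothetical `purchases` table with `email` and `purchased_at` columns, and uses an in-memory SQLite database as a stand-in for a real data warehouse; none of these names come from an actual system.

```python
import csv
import io
import sqlite3
from datetime import datetime, timedelta


def export_recent_purchasers(conn, now, days=30):
    """Return a CSV string of customers who purchased in the last `days` days."""
    # ISO 8601 timestamps compare correctly as plain strings.
    cutoff = (now - timedelta(days=days)).isoformat()
    rows = conn.execute(
        "SELECT DISTINCT email FROM purchases "
        "WHERE purchased_at >= ? ORDER BY email",
        (cutoff,),
    ).fetchall()
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["email"])
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical stand-in for a warehouse table (illustrative data only).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE purchases (email TEXT, purchased_at TEXT)")
conn.executemany(
    "INSERT INTO purchases VALUES (?, ?)",
    [
        ("ana@example.com", "2024-03-20T10:00:00"),
        ("bo@example.com", "2024-01-05T09:30:00"),
        ("ana@example.com", "2024-03-25T12:00:00"),
    ],
)

# The result is a snapshot: it reflects the data only as of `now`.
snapshot = export_recent_purchasers(conn, now=datetime(2024, 3, 31))
```

The output is a static file that is accurate only at the moment the query runs, so the next request means writing and running a similar query again.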

Don't let data shipping cycles feel like eternal punishment in the depths of Hades.

When the cycle is complete, the boulder rolls to the bottom of the hill, and the engineers need to carry out the whole process yet again the next time the marketers or PMs have another request. Each pass through this cycle does nothing to make the next data retrieval effort more efficient. Without a robust customer data infrastructure, companies will remain in an endless holding pattern where one-off projects constantly repeat themselves, and the hill is just as daunting at the onset of every data request.

Not only does this cause logistical pain points, but it also places a great strain on the relationships between developers and non-technical stakeholders. Engineers see time-intensive data requests from product managers and marketers as a considerable distraction from their primary focus––developing and improving upon the product’s core features and functionality. Furthermore, implementing new dependencies in their apps for data collection could result in slower performance and larger download sizes––something all developers work to avoid. From the marketing/product management perspective, engineers are a roadblock standing in the way of accessing and leveraging customer data to guide product enhancement and drive personalized customer experiences.

A path out of the data shipping cycle

There is a way out of the logistical bottlenecks and inter-team friction that come from repetitive data shipping cycles, but it involves making some enhancements to your organization’s data infrastructure, such as:

- Standardize your data collection. All customer data points coming from each of your company’s touchpoints need to adhere to the same schema at collection. That way, they can be unified with data from other sources.

- Create persistent user profiles. All data coming into the system needs to be unified with data from the same user, and added to a persistent customer profile.

- Make data accessible and actionable to non-technical stakeholders. Product and marketing teams need to be able to own and take action on the data coming into your system in a meaningful way, without enlisting the help of engineers.

- Replace manual queries with automated jobs. Common data retrieval processes should not require engineering support every time they are required.
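The first two pillars can be sketched in a few lines. This is an illustration under stated assumptions, not how any particular CDP implements them: the `Event` fields, the `normalize` helper, and the `ProfileStore` class are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field

REQUIRED_FIELDS = {"user_id", "name", "timestamp"}


@dataclass
class Event:
    """One shared shape for events from every touchpoint (illustrative fields)."""
    user_id: str
    name: str
    timestamp: str  # ISO 8601
    source: str
    attributes: dict = field(default_factory=dict)


def normalize(raw, source):
    """Map a touchpoint-specific payload onto the shared Event schema."""
    missing = REQUIRED_FIELDS - set(raw)
    if missing:
        raise ValueError(f"{source} event missing fields: {sorted(missing)}")
    # Everything outside the required fields is kept as free-form attributes.
    attributes = {k: v for k, v in raw.items() if k not in REQUIRED_FIELDS}
    return Event(raw["user_id"], raw["name"], raw["timestamp"], source, attributes)


class ProfileStore:
    """Keeps one persistent profile per user, updated as events arrive."""

    def __init__(self):
        self.profiles = {}

    def ingest(self, event):
        profile = self.profiles.setdefault(
            event.user_id,
            {"user_id": event.user_id, "events": [], "sources": set()},
        )
        profile["events"].append(event)
        profile["sources"].add(event.source)
        return profile


store = ProfileStore()
store.ingest(normalize(
    {"user_id": "u1", "name": "purchase",
     "timestamp": "2024-03-20T10:00:00", "sku": "A1"},
    source="web",
))
store.ingest(normalize(
    {"user_id": "u1", "name": "app_open", "timestamp": "2024-03-21T08:00:00"},
    source="ios",
))
```

Because both events were validated against the same schema at collection, the web purchase and the iOS app open land on a single profile for `u1` instead of in two disconnected systems.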

With these pillars of a robust and permanent customer data infrastructure in place, your engineering, marketing, and product teams can begin to move in the direction of frictionless cooperation, and the company’s customer data can serve to empower all of them.

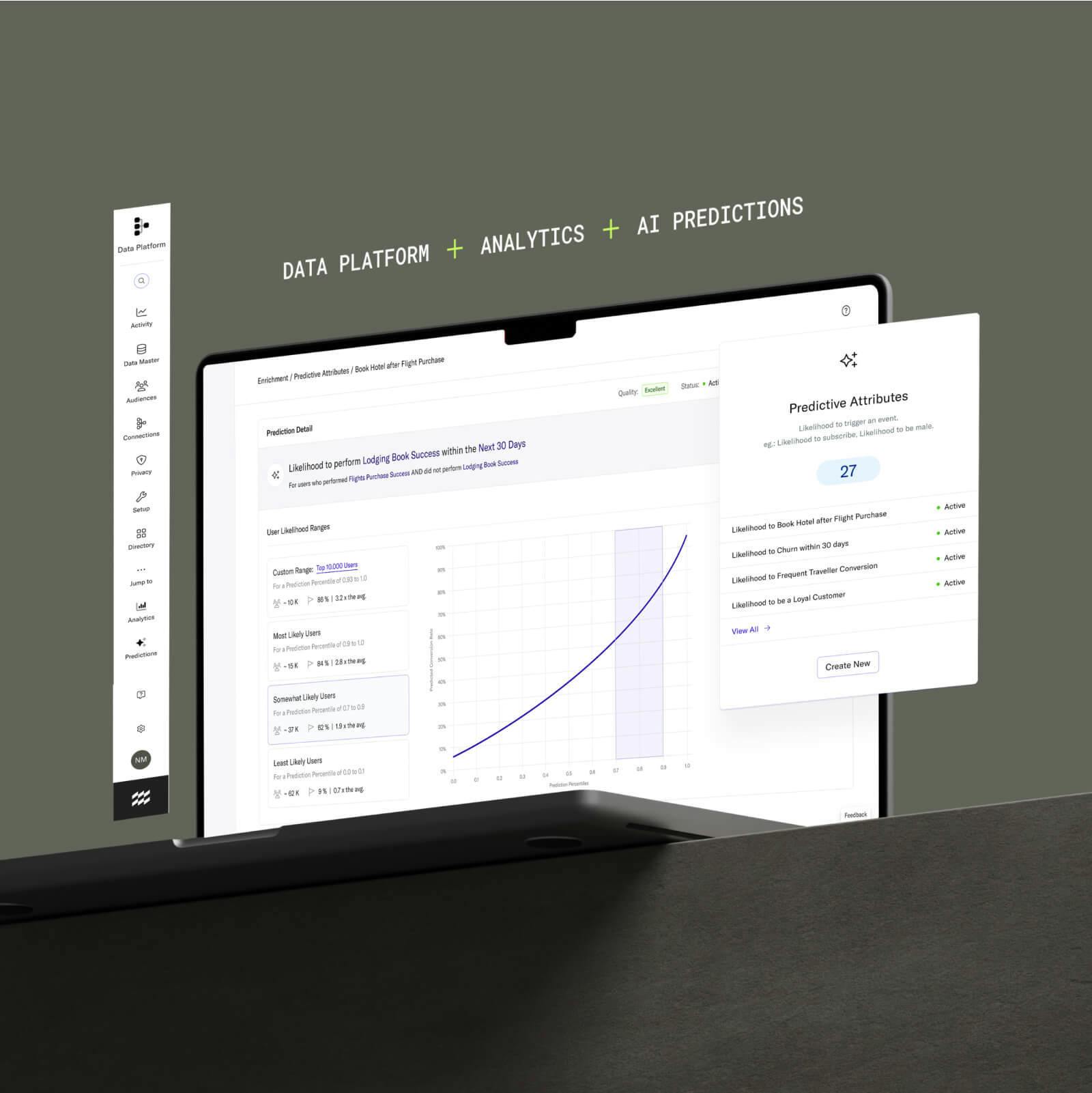

Of course, this raises the question of how to build data infrastructure that supports both engineering and growth teams. There is no single path to reaching this goal, but one way to jumpstart the effort is by placing a Customer Data Platform (CDP) at the heart of your organization’s data stack. CDPs first emerged around 2013 but began generating broad awareness around 2017. Generally speaking, a CDP is centralized data infrastructure that aims to aggregate and make sense of a company’s customer data. More specifically, the five key functions of a CDP are data collection, data governance, data quality protection and profile unification, segmentation, and activation. It’s worth noting, however, that not all CDP vendors accomplish each of these functions to the same extent, and therefore the ways in which teams adopt CDPs still vary. Here is a useful introduction to the CDP space and guide on what to consider when adopting a CDP.

CDPs have traditionally been understood to primarily benefit marketers and product managers. While CDPs do deliver profound benefits to these teams, developers can also realize considerable gains from adopting this powerful tool in terms of workflow efficiency, code clarity, and app performance. Here are some (but certainly not all) of the key developer wins that come from investing in permanent customer data infrastructure with a CDP:

Streamlined data integration workflows

Having a robust marketing stack often entails numerous third-party dependencies in your sites and applications. This adds clutter and complexity to codebases, and requires engineers to become familiar with a wide variety of API conventions to service data requests when they arise.

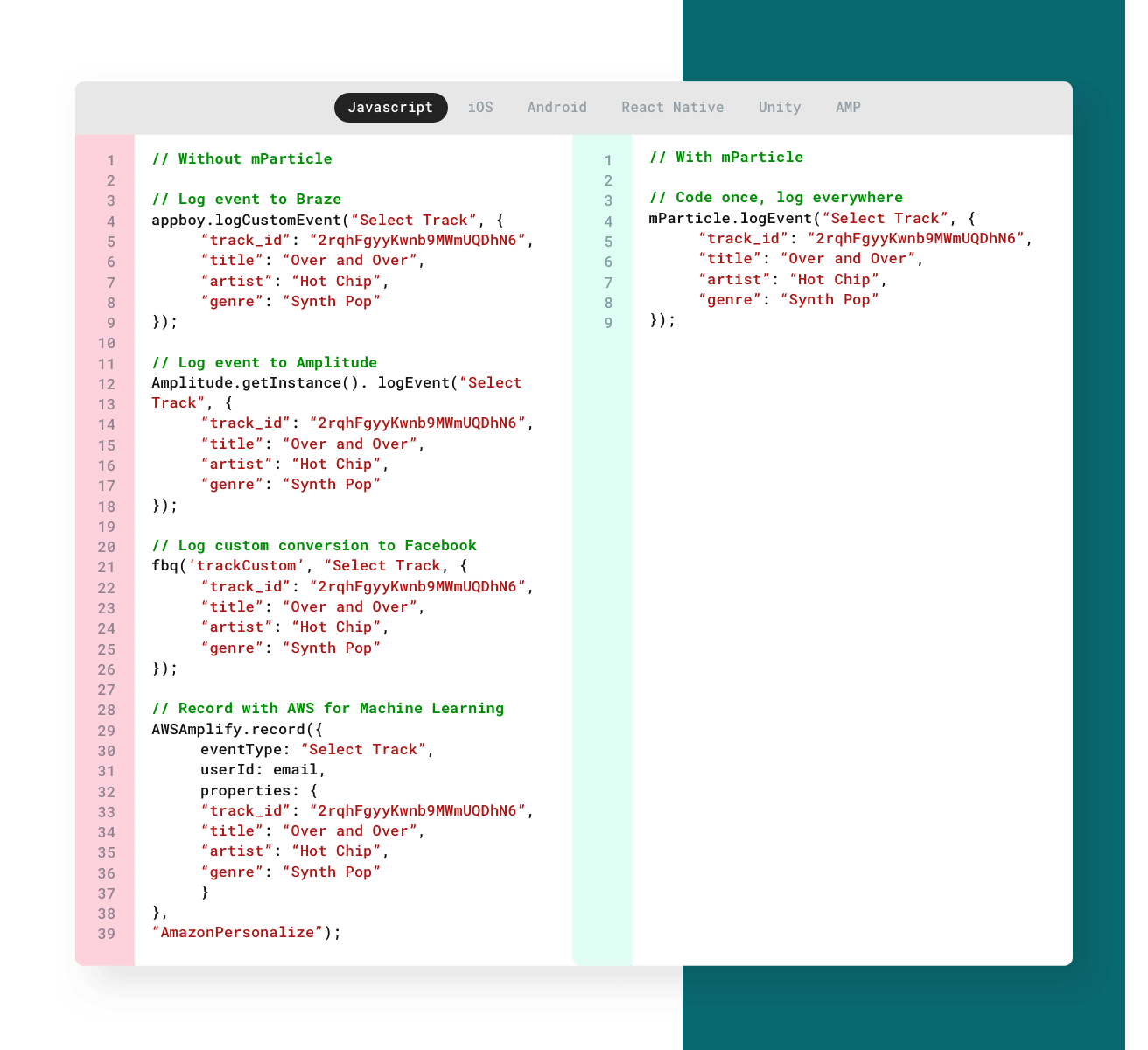

With a CDP like mParticle acting as a central hub in your data ecosystem, however, these individual dependencies can be replaced with a single SDK to collect a wide variety of events across each of your company’s touchpoints. In addition to platform-specific SDKs for web, iOS, and Android, mParticle’s development toolkit includes libraries for common cross-platform mobile development frameworks like React Native and Xamarin. On the back end, mParticle also delivers SDKs that let developers send data from any location using common server-side languages like Node and Python.

Clean up cluttered code with a single SDK for data collection.

No more one-off integration requests

Requests to add or remove integrations with third-party vendors are one of the most common triggers of data shipping cycles. A key benefit of a CDP built as robust infrastructure is direct integration with the most commonly used tools for advertising, analytics, engagement, A/B testing, segmentation, and a wide variety of other functions. These integrations allow marketing and product teams to easily add and remove vendors, and direct the flow of data without enlisting the support of engineers. mParticle, for instance, allows users to handle these operations directly in the dashboard. This gives marketers and product managers considerable freedom to perform A/B testing to see which vendors best meet their needs, and otherwise experiment with data integrations without needing to bring one-off requests to the engineering team.
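The general pattern behind this, a single collection point that forwards events to whichever destinations are currently switched on, can be sketched as follows. This is not mParticle’s SDK or API; `EventRouter` and its methods are hypothetical names, and the "destinations" are plain Python lists standing in for vendor integrations.

```python
class EventRouter:
    """Single collection entry point that fans events out to enabled destinations."""

    def __init__(self):
        self._destinations = {}  # destination name -> callable that accepts an event
        self._enabled = set()

    def register(self, name, send):
        """Engineering work, done once per destination."""
        self._destinations[name] = send

    def set_enabled(self, name, enabled):
        """Configuration change; in a CDP this would be a dashboard toggle."""
        if name not in self._destinations:
            raise KeyError(name)
        if enabled:
            self._enabled.add(name)
        else:
            self._enabled.discard(name)

    def track(self, event):
        """The only call application code makes; returns where the event went."""
        delivered = []
        for name in sorted(self._enabled):
            self._destinations[name](event)
            delivered.append(name)
        return delivered


# Lists stand in for third-party vendor integrations (illustrative only).
analytics_inbox, email_inbox = [], []
router = EventRouter()
router.register("analytics", analytics_inbox.append)
router.register("email", email_inbox.append)

router.set_enabled("analytics", True)
first = router.track({"name": "purchase", "user_id": "u1"})

# Turning on a second vendor changes configuration, not application code.
router.set_enabled("email", True)
second = router.track({"name": "app_open", "user_id": "u1"})
```

The application calls `track` the same way before and after the email destination is enabled, which is the property that lets vendor changes happen without a code release.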

For engineers, the most obvious benefit from this way of handling data is reclaimed time. The hours once spent manually dealing with single integrations can now be spent building core features and functionality of the company’s products. But even with a data infrastructure tool like a CDP in place, developers are not completely relieved of handling any and all data needs; there will still be occasion to track new events or make subtle changes to the data that is being collected on an ongoing basis. Since a CDP narrows the scope of this work significantly, however, these tasks can be handled by a less senior developer, whereas without a permanent data infrastructure, they demand input from seasoned engineers with intimate knowledge of the entire data stack.

Realize a permanent product from your data efforts

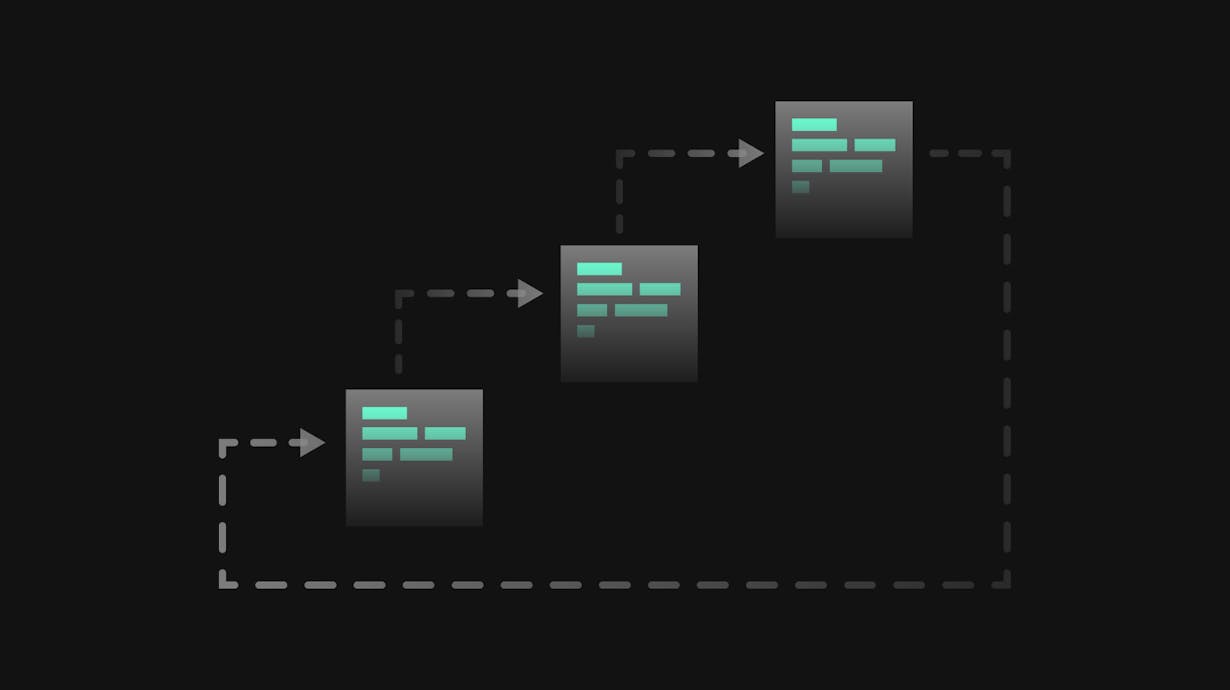

In the use case we explored above, the absence of a permanent data infrastructure meant that the only output of the engineering team’s work was a static spreadsheet or data file. From a developer’s standpoint, a single resource that starts going out of date as soon as it is generated hardly justifies the considerable effort required to produce it. With a CDP, however, once an engineering team implements data collection across the company’s touchpoints with a single SDK, that work pays dividends every time the company’s customer data is needed afterward.

Let’s evaluate the email use case we examined before, only this time we’ll imagine the company in question is using mParticle in their data stack. With eCommerce events implemented across the company’s apps and websites, the engineering team would not need to collect or harvest data for this one use case––the data needed to create personalized emails is being collected on an ongoing basis. In addition, this data would consistently be used to update persistent user profiles, making it much more versatile and extensible.

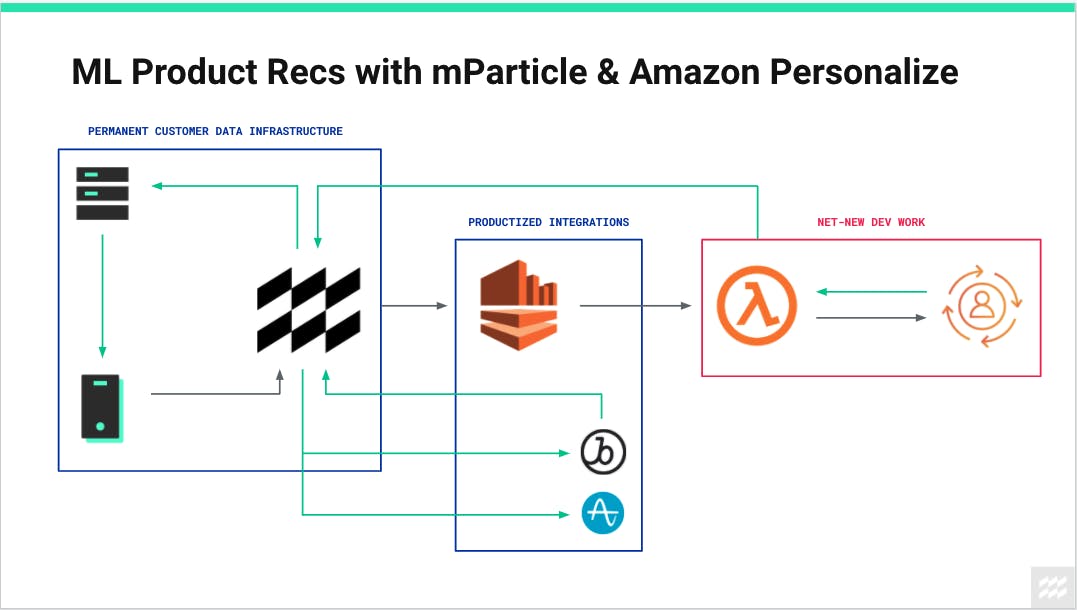

Instead of simply basing the recommendations in this email on static customer data points that only reflect a single point in time, we can take things a step further. For example, through mParticle’s direct integration with Amazon Kinesis, these user profiles could be fed through AWS tools like Lambda and Personalize to use a machine learning algorithm that generates up-to-date product recommendations for each user. Once these recommendations have been created, they can be sent back to mParticle and added to each user’s profile, where they can help drive personalization not only in one email use case, but on the company’s website, mobile apps, and other touchpoints as well:

How data use cases look with permanent data infrastructure in place.
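The enrichment loop described above (profiles out, recommendations back in) can be illustrated with a toy example. This sketch deliberately avoids real AWS or mParticle calls: `recommend` is a simple popularity count standing in for a machine learning model, and the profile fields are hypothetical.

```python
from collections import Counter


def recommend(profile, all_profiles, k=2):
    """Toy stand-in for a recommendation model: popular items the user has not bought."""
    owned = set(profile["purchased"])
    popularity = Counter(
        sku
        for other in all_profiles.values()
        for sku in other["purchased"]
        if sku not in owned
    )
    # Sort by descending count, then by SKU, so the output is deterministic.
    ranked = sorted(popularity.items(), key=lambda item: (-item[1], item[0]))
    return [sku for sku, _ in ranked[:k]]


def enrich_profiles(profiles, k=2):
    """Write recommendations back onto each profile for reuse on any channel."""
    for profile in profiles.values():
        profile["recommendations"] = recommend(profile, profiles, k)
    return profiles


# Illustrative purchase histories only.
profiles = {
    "u1": {"purchased": ["A1", "B2"]},
    "u2": {"purchased": ["B2", "C3"]},
    "u3": {"purchased": ["C3"]},
}
enrich_profiles(profiles)
```

Because the recommendations are stored on the profile rather than baked into one email, the website and mobile apps can read the same field when they render personalized content.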

Although having a permanent data infrastructure does not completely eliminate the need for developers to interact with data, we can clearly see that it puts a stop to endless data shipping cycles. In turn, engineering teams reclaim their time, and marketers and product managers are empowered to leverage their customer data in more effective and innovative ways. Finally, when engineers do have occasion to service data requests, they are no longer shipping static files with single-case utility––they are contributing to permanent data infrastructure that transcends individual use cases, and the boulder stays at the top of the hill.

Learn more about how mParticle’s data infrastructure features and developer tools like IDSync, Profile API, Smartype, and others can empower engineers and data stakeholders across your organization.